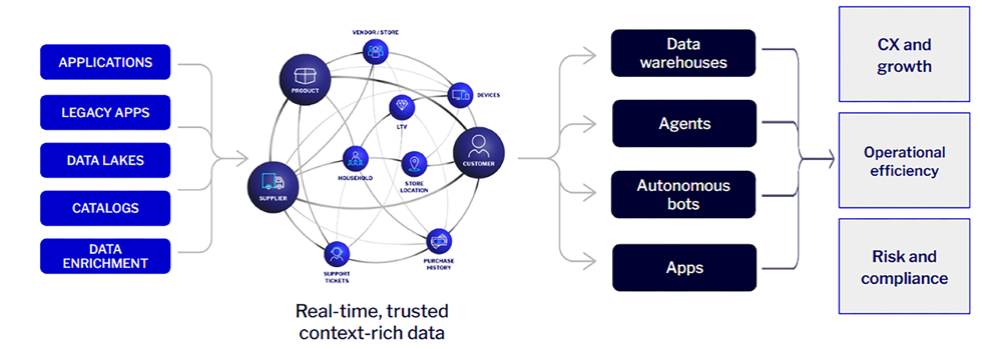

Agentic AI will transform how work gets done, but only if it runs on trusted, unified real-time data. The leaders are building that foundation now.

AI agents are moving from hype to reality, promising to automate decisions and workflows without constant oversight, but speed and autonomy mean nothing if they run on bad data. Gartner warns that by 2026, 60% of AI projects without an AI-ready data foundation will be abandoned, yet 63% of data leaders admit they lack, or aren’t sure they have, the right data practices. The gap between traditional data management and AI-ready data is where initiatives fail, and where the real work to unlock agentic AI must begin.

Data silos: Yesterday’s problem, today’s AI risk

One of the biggest obstacles to the agentic future? Data silos. In an ideal world, all enterprise data would be seamlessly unified and accessible in real time, a vision promised decades ago with the arrival of data warehouses and repeated with every new wave of data platforms. But the reality is harsher. Especially for companies with legacy systems and decades of accumulated tech debt, data remains scattered across functions, geographies, and applications.

In the age of agentic AI, those silos aren’t just an inconvenience; they’re a direct threat to performance. An AI agent that can’t see the complete, current picture will make decisions based on partial truths, eroding trust and compounding errors at scale.

Breaking those silos isn’t just an IT exercise; it’s a strategic imperative. AI agents thrive on connected, trusted, real-time data that flows freely across the organization and its ecosystem. When every decision, human or machine, is informed by the same up-to-date, context-rich intelligence, errors shrink, efficiency grows, and the speed of action becomes a competitive weapon.

This requires rethinking data architecture from the ground up.
Instead of treating data as a collection of static repositories, it must become a living, interoperable network, one that updates continuously, scales easily, and speaks a common semantic language so every agent, application, and analyst is working from the same definitions.

Figure 1: The future with AI in the enterprise

Leaders who make this shift now will have a decisive advantage: the ability to deploy AI agents that not only act quickly but act correctly, accelerating innovation, sharpening decision-making, and building trust with every automated interaction. Those who wait will be left with fragmented pilots and missed opportunities, watching competitors pull further ahead.

Trusted data: The gatekeeper to agentic AI

In the history of enterprise technology, few shifts carry as much transformative potential, and as much risk, as the rise of agentic AI. These systems don’t just assist users; they reason, decide, and act. Across industries, they are already reshaping how decisions are made, how work gets done, and how businesses engage with customers, partners, and markets.

But here’s the real challenge: agentic AI doesn’t need more data; it needs trusted data, delivered at the speed and scale every enterprise is striving to reach. Research shows that while most leaders recognize the promise of agentic AI, only a fraction feel truly prepared to capture its value. What’s holding them back? The trusted data foundation that underpins business operations.

Agentic AI cannot succeed without a shift in how organizations unify, govern, and operationalize their data. It’s not enough to have a data lake or a dashboard. These systems require context-rich, relationship-aware data delivered in milliseconds: data that reflects transactions, interactions, and dependencies across the enterprise, not just static records in isolation.
For organizations serious about scaling agentic AI responsibly, the first step isn’t deploying the agent; it’s building the semantic data layer that ensures every decision is based on accurate, complete, and current information. Without that foundation, automation becomes risky. With it, agentic AI can operate at full potential: fast, safe, and aligned with business goals.

Act before the agents arrive

Agentic AI is not a distant concept. It’s here, and it’s maturing quickly. The companies that will lead in the Age of Intelligence are those building their trusted, real-time data backbones today. Delay, and you risk deploying fast, capable AI agents that make bad decisions faster than you can correct them. Act now, and you position your organization to operate at the speed, scale, and confidence the future demands.

For leaders ready to move from vision to execution, our white paper, 10 Data Rules to Win in the Age of Intelligence, offers a strategic blueprint for success: a practical framework for architecting the unified, trusted, and interoperable data foundation that agentic AI demands. Read it, share it, and start building the intelligence layer your business will rely on for the next decade.