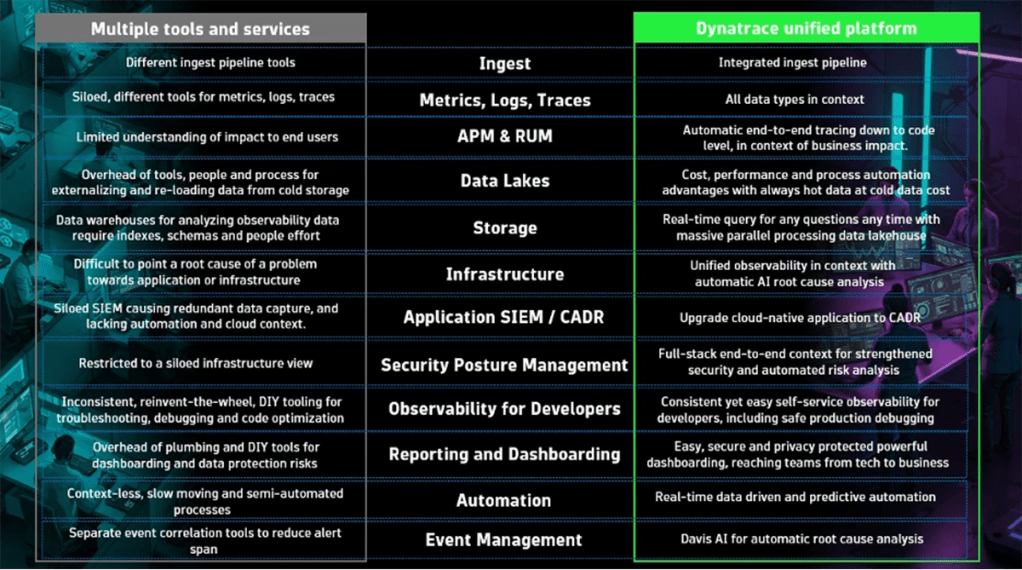

Almost daily, teams request new tools (for database management, CI/CD, security, and collaboration) to address specific needs. Increasingly, those tools include AI capabilities that promise to boost productivity and automate routine tasks. But proliferating tools across different teams for different uses can also balloon costs, introduce operational inefficiency, increase complexity, and even undermine collaboration. Moreover, tool sprawl can increase risks for reliability, security, and compliance.

As an executive, I am always seeking simplicity and efficiency to keep the architecture of the business as streamlined as possible. Here are five strategies executives can pursue to reduce tool sprawl, lower costs, and increase operational efficiency.

Key insights for executives:

- Increase operational efficiency with automation and AI to foster seamless collaboration: With AI and automated workflows, teams work from shared data, automate repetitive tasks, and accelerate resolution, focusing more on business outcomes.
- Unify tools to eliminate redundancies, rein in costs, and ease compliance: This not only lowers the total cost of ownership but also simplifies regulatory audits and improves software quality and security.
- Break data silos and add context for faster, more strategic decisions: Unifying metrics, logs, traces, and user behavior within a single platform enables real-time decisions rooted in full context, not guesswork.
- Minimize security risks by reducing complexity with unified observability: Converging security with end-to-end observability gives security teams the deep, real-time context they need to strengthen their security posture and accelerate detection and response in complex cloud environments.
- Simplify data ingestion and up-level storage for better, faster querying: With Dynatrace, petabytes of data are always “hot” for real-time insights, at a “cold” cost, without the delays and overhead of reindexing and rehydration.

1. Increase operational efficiency to foster seamless collaboration

❌ Reinventing the wheel: One of the biggest challenges organizations face is connecting all the dots so teams can take swift action that’s meaningful to the business. Too many signals from point solutions and DIY tools spread across multiple teams hinder collaboration. Moreover, an inconsistent tech stack and a lack of enterprise-ready integration and authentication approaches mean teams must reinvent the wheel, repeatedly building solutions to the same problems instead of focusing on business goals.

✅ Automate and collaborate on answers from data: By uniting data from across the organization in a single platform, teams can focus on making faster, higher-quality decisions in a shared context. With AI they can trust, teams can understand the real-time context of digital services, enabling automation that predicts and prevents issues, such as service-level violations or third-party software vulnerabilities, before they occur. The Dynatrace AutomationEngine orchestrates workflows across teams to implement automated remediations, while AppEngine lets teams tailor solutions to custom needs without creating silos.

2. Unify tools to rein in costs and ease compliance

❌ High costs: Organizations often feel the pain of tool sprawl first in the pocketbook. Multiple tools increase the total cost of ownership through accumulated license fees, reduced negotiating power, and redundant maintenance and operations effort. For example, organizations typically use only 60% of their security tools. Too many tools and DIY solutions also complicate regulatory compliance and make integration harder, which reduces agility and drives up costs through wasted time.

✅ Business-focused, unified platform approach: A unified platform approach enables platform engineering and self-service portals, simplifying operations and reducing costs.
The Dynatrace AI-powered unified platform has been recognized for its ability not only to streamline operations and reduce costs but also to provide better, faster data analysis. Standardizing platforms minimizes inconsistencies, eases regulatory compliance, and enhances software quality and security. Dynatrace integrates application performance monitoring (APM), infrastructure monitoring, and real-user monitoring (RUM) into a single platform. Its Foundation & Discovery mode offers a cost-effective, unified view of the entire infrastructure, including non-critical applications previously monitored with legacy APM tools.

3. Break data silos and add context for faster, more strategic decisions

❌ Data silos: When every team adopts its own toolset, organizations wind up with different query technologies, heterogeneous data types, and incongruous storage speeds. Last year, Dynatrace research revealed that the average multi-cloud environment spans 12 different platforms and services, exacerbating the problem of data silos. The situation worsens when separate tools track metrics, logs, traces, and user behavior: crucial, interconnected details end up in separate storage. It becomes practically impossible for teams to stitch them back together to get quick answers in context and make strategic decisions.

✅ All data in context: By bringing together metrics, logs, traces, user behavior, and security events in one platform, Dynatrace eliminates silos and delivers real-time, end-to-end visibility. The Smartscape® topology map automatically tracks every component and dependency, offering precise observability across the entire stack. Davis®, the causal AI engine, instantly identifies root causes and predicts service degradation before it impacts users. Generative AI enhances response speed and clarity, accelerating incident resolution and boosting team productivity.
Fully contextualized data enables faster, more strategic decisions without jumping between tools or waiting on correlation across teams. This unified approach gives teams trustworthy, real-time answers, which is critical for navigating today’s complex digital ecosystem.

4. Strengthen security with unified observability

❌ On average, organizations rely on 10 different observability solutions and nearly 100 different security tools to manage their applications, infrastructure, and user experience. At the same time, traditional network-based security approaches are evolving. Enhanced security measures, such as encryption and zero trust, make it increasingly difficult to analyze security threats using network packets. This shift is forcing security teams to focus on the application layer instead. While network security remains relevant, the emphasis is now on application observability and threat detection. As a result, many organizations carry the burden of managing separate systems for network security and application observability, leading to redundant configurations, duplicated data collection, and operational overhead.

✅ As traditional network-based security approaches evolve, the convergence of security and observability tools is becoming essential, especially for cloud- and AI-native projects. Platforms such as Dynatrace address these challenges by combining security and observability in a single platform. This integration eliminates the need for separate data collection, transfer, configuration, storage, and analytics, streamlining operations and reducing costs. From a security risk mitigation perspective, integrating security and observability not only