Don’t Fire Your Developers! What AI-Enhanced Software Development Means For Technology Executives

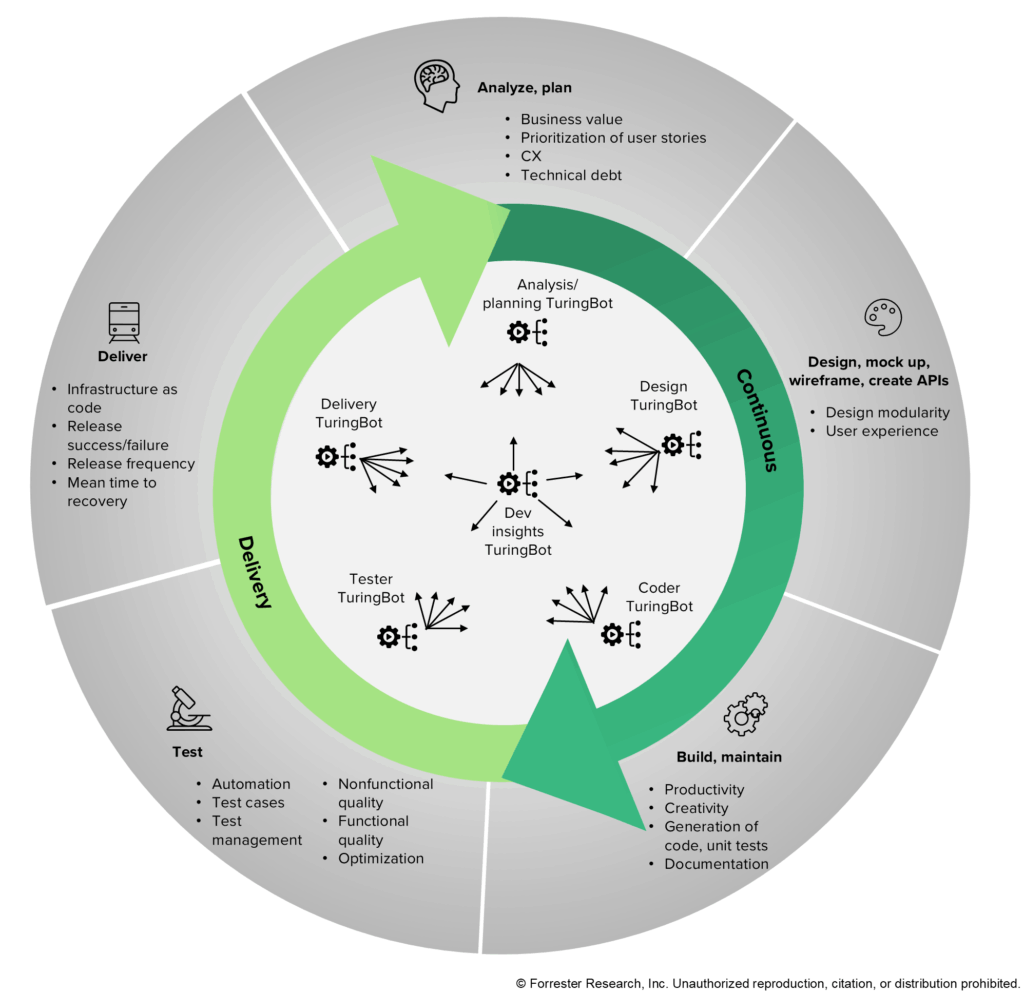

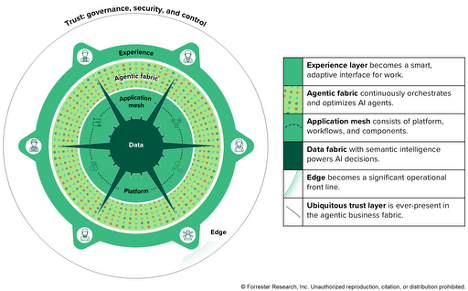

You’ve heard the stories. “More than a quarter of all new code at Google is generated by AI,” boasts Sundar Pichai. “Twenty or thirty percent of [Microsoft’s] code … is written by software,” Satya Nadella proclaims. At the same time, companies seem to be freezing developer hiring or outright firing their developers. All of this has given you a severe case of AI SDLC FOMO.

The reality? I analyzed Forrester’s survey data, reviewed hundreds of guidance sessions and inquiries, conducted 17 interviews, and built on the foundation laid by expert analysts such as Diego Lo Giudice. In none of those conversations did a technology leader say they were looking to fire their developers. Instead, what I found was equal parts interest and ignorance, promise and trepidation.

You Shouldn’t Oversimplify The SDLC

There is no doubt that the willingness to leverage AI for software development is high. In Forrester’s Developer Survey, 2025, using AI and genAI is a top objective for developers (right alongside improving software security and using more open source). At the same time, tech leaders told me they struggle against a notion, common among their peers, that the SDLC is a single process.

For one, adoption rates for AI-enhanced assistants and agents (what Forrester refers to as TuringBots) vary across SDLC stages, largely depending on process and tool maturity. In most organizations, coding is further ahead than, for example, analysis and planning. Efficiency gains also vary by SDLC phase. Intesa Sanpaolo, a leading European bank, saw a 40% efficiency gain in test design, compared with 30% in development (including unit testing) and 15% in requirements gathering and analysis. Many others I talked to described the same pattern: different gains in different areas of the SDLC, with different adoption rates even across applications at the same company.
The reality: There is no one-size-fits-all approach to applying AI to the SDLC, and we need to stop thinking of the SDLC as a single process that a single agent can swallow whole.

The ROI Conundrum: You Can’t Justify What You Don’t Measure Correctly

Many technology leaders that Forrester speaks to are well past the honeymoon stage with AI investments. Experiments went well (or faltered), and now is the time to scale. However, we continue to get questions about software development that show a lack of maturity. “How many lines of code should be written per day?” is not a valid KPI, whether you’re measuring a person or an AI agent. Instead, you need to focus on the metrics that matter: progress, such as velocity and rework trends; quality, such as production defects and deployment failures; efficiency, such as throughput and flow; and engagement, such as developer experience.

All of these metrics ultimately connect to business value. How do we onboard customers faster with new features? What is the impact of a low-quality change on our Net Promoter Score℠? How do we improve revenue through more efficient integration with core systems? These are baseline questions you need to answer before you implement AI tools, yet too many people I spoke to are trying to answer them afterward. If you have difficulty calculating these metrics, value stream management solutions can help do the job for you.

Getting Past Trust Concerns

Early on, there were concerns that AI tools in the SDLC might accidentally reproduce a competitor’s code or, worse, that your IP would be absorbed into someone else’s model. These concerns have proved largely unfounded. We found that adoption in highly regulated verticals such as financial services and government is moving nearly as fast as at companies that dove into AI headfirst. To speed things up further, some enterprises are drawing on past experience applying AI in other areas of their business to streamline adoption.
They are also addressing fears of IP theft with both knowledge and technical solutions: Many of today’s solutions can be trained on your own codebase, and if you are truly concerned, you can run some solutions on-premises. This can alleviate the concerns of your legal and risk management teams and help you avoid adoption deadlock.

Hold On To Your Developers, But You Must Reskill Them

Perhaps most tellingly, the people I spoke to saw a future for developers, but a different kind of “developer.” Architectural skills and business domain knowledge come to the forefront. (In other words: Vibe coding is cool, but vibe engineering is way cooler.) As AI improves, technical knowledge about writing code declines in importance. Instead, multiagent workflows will force developers to become agent orchestrators: They will navigate agentic choruses far more than the individual songs they’re used to singing. We are already starting to see this in more mature organizations.

As for entry-level developers? I admit we see challenges here. Some I spoke to felt AI could provide ongoing training to new talent, but most disagreed. Instead, the consensus was that tribal knowledge is still king and that the best way to share it is through the veteran developers on your team. Essentially, veteran conductors become veteran teachers.

Moving Toward The Agentic SDLC

I would argue we stand on the precipice of the biggest change to software development since the move from assembly to higher-level languages. Yes, it is even bigger than the advent of cloud-native development. Entire processes of analyzing, planning, designing, building, testing, and delivering software are being augmented. But that’s the key word: augmented. Until the day comes that we can fully trust AI agents to deliver critical, production-level software across a wide spectrum of business use cases, there will be a need for developers. Coders, who take requirements, write code, and pass their work on to the next phase of the SDLC, will die out.
Developers, who understand the business impact of their work and reshape the SDLC as they see fit, will thrive.

For a great deal more detail, please read my new report “Don’t Fire Your Developers! What AI-Enhanced Software Development Means For Technology