Meta makes its MobileLLM open for researchers, posting full weights

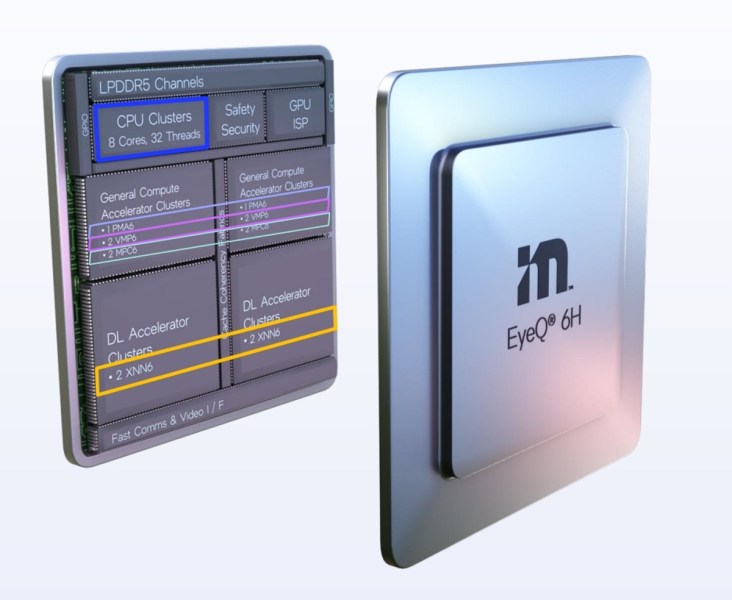

Meta AI has announced the open-source release of MobileLLM, a set of language models optimized for mobile devices, with model checkpoints and code now accessible on Hugging Face. However, it is presently available only under a Creative Commons 4.0 non-commercial license, meaning enterprises can’t use it in commercial products.

Originally described in a research paper published in July 2024 and covered by VentureBeat, MobileLLM is now fully available with open weights, marking a significant milestone for efficient, on-device AI.

The release of these open weights makes MobileLLM a more direct, if roundabout, competitor to Apple Intelligence, Apple’s on-device/private cloud hybrid AI solution made up of multiple models, shipping out to users of its iOS 18 operating system in the U.S. and outside the EU this week.

However, because it is restricted to research use and must be downloaded and installed from Hugging Face, it is likely to remain limited to a computer science and academic audience for now.

More efficiency for mobile devices

MobileLLM aims to tackle the challenges of deploying AI models on smartphones and other resource-constrained devices.

With parameter counts ranging from 125 million to 1 billion, these models are designed to operate within the limited memory and energy capacities typical of mobile hardware.

By emphasizing architecture over sheer size, Meta’s research suggests that well-designed compact models can deliver robust AI performance directly on devices.

Resolving scaling issues

The design philosophy behind MobileLLM deviates from traditional AI scaling laws that emphasize width and large parameter counts.

Meta AI’s research instead focuses on deep, thin architectures to maximize performance, improving how abstract concepts are captured by the model.
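A rough back-of-the-envelope sketch may help make the sizing argument concrete. It assumes the common approximation of about 12 × d² parameters per transformer layer (attention projections plus a 4x feed-forward block) and ignores embeddings, normalization layers and any attention or feed-forward variants. The layer counts and widths below are hypothetical illustrations, not MobileLLM’s published configurations.

```python
def approx_block_params(n_layers: int, d_model: int) -> int:
    """Approximate parameters in the transformer layers only.

    Assumes ~4*d^2 for the attention projections and ~8*d^2 for a
    4x-wide feed-forward block, i.e. ~12*d^2 per layer.
    """
    return 12 * n_layers * d_model ** 2


def weight_memory_mb(n_params: int, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in megabytes (10^6 bytes)."""
    return n_params * bytes_per_param / 1e6


# Two hypothetical shapes with nearly the same parameter budget.
deep_thin = approx_block_params(n_layers=30, d_model=576)
wide_shallow = approx_block_params(n_layers=12, d_model=912)
print(f"deep and thin:    {deep_thin:,} parameters")
print(f"wide and shallow: {wide_shallow:,} parameters")

# Weight memory at 16-bit precision (2 bytes per parameter) for the
# smallest and largest parameter counts mentioned in the article.
for n_params in (125_000_000, 1_000_000_000):
    mb = weight_memory_mb(n_params, bytes_per_param=2)
    print(f"{n_params:,} parameters -> {mb:,.0f} MB of weights")
```

Under these assumptions, the two shapes cost almost the same number of parameters, so choosing depth over width is a question of how a fixed budget is spent rather than how large it is. At 16-bit precision the weights alone occupy roughly 250 MB at 125 million parameters and about 2 GB at 1 billion, before counting activations or the rest of the system.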
Yann LeCun, Meta’s Chief AI Scientist, highlighted the importance of these depth-focused strategies in enabling advanced AI on everyday hardware.

MobileLLM incorporates several innovations aimed at making smaller models more effective:

• Depth Over Width: The models employ deep architectures, shown to outperform wider but shallower ones in small-scale scenarios.
• Embedding Sharing Techniques: These maximize weight efficiency, crucial for keeping the model architecture compact.
• Grouped Query Attention: Inspired by work from Ainslie et al. (2023), this method optimizes attention mechanisms.
• Immediate Block-wise Weight Sharing: A novel strategy to reduce latency by minimizing memory movement, helping keep execution efficient on mobile devices.

Performance metrics and comparisons

Despite their compact size, MobileLLM models perform strongly on benchmark tasks. The 125 million and 350 million parameter versions show accuracy improvements of 2.7% and 4.3%, respectively, over previous state-of-the-art (SOTA) models in zero-shot tasks.

Remarkably, the 350M version even matches the API calling performance of the much larger Meta Llama-2 7B model.

These gains demonstrate that well-architected smaller models can handle complex tasks effectively.

Designed for smartphones and the edge

MobileLLM’s release aligns with Meta AI’s broader efforts to democratize access to advanced AI technology.

With the increasing demand for on-device AI due to cloud costs and privacy concerns, models like MobileLLM are set to play a pivotal role.

The models are optimized for devices with memory constraints of 6-12 GB, making them practical for integration into popular smartphones like the iPhone and Google Pixel.

Open but non-commercial

Meta AI’s decision to open-source MobileLLM reflects the company’s stated commitment to collaboration and transparency. Unfortunately, the licensing terms prohibit commercial usage for now, so only researchers can benefit.
By sharing both the model weights and pre-training code, Meta AI invites the research community to build on and refine its work. This could accelerate innovation in the field of small language models (SLMs), making high-quality AI accessible without reliance on extensive cloud infrastructure.

Developers and researchers interested in testing MobileLLM can now access the models on Hugging Face, fully integrated with the Transformers library. As these compact models evolve, they promise to redefine how advanced AI operates on everyday devices.
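For readers who want a feel for the architectural techniques listed earlier in the article, the toy sketch below illustrates three of them in plain Python: how grouped-query attention maps several query heads onto one shared key/value head, what an "immediate" block-wise sharing schedule looks like when each block’s weights are reused right away, and how many parameters are saved when the input embedding and output projection share one table. This is not Meta’s code. All function names, head counts, vocabulary size and dimensions are assumptions chosen for illustration.

```python
def kv_head_for_query_head(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """Grouped-query attention: consecutive query heads share one key/value head."""
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


def immediate_blockwise_schedule(n_unique_blocks: int, repeats: int = 2) -> list:
    """Execution order in which each block is run `repeats` times in a row.

    Running a block again immediately means its weights are already
    loaded, which is the intuition behind reducing memory movement.
    """
    return [block for block in range(n_unique_blocks) for _ in range(repeats)]


def embedding_params(vocab_size: int, d_model: int, shared: bool) -> int:
    """Parameters in the input embedding plus the output projection."""
    table = vocab_size * d_model
    return table if shared else 2 * table


# Hypothetical sizes, chosen only to keep the output easy to read.
N_Q_HEADS, N_KV_HEADS = 9, 3
mapping = [kv_head_for_query_head(h, N_Q_HEADS, N_KV_HEADS) for h in range(N_Q_HEADS)]
schedule = immediate_blockwise_schedule(n_unique_blocks=3)
saved = embedding_params(32_000, 576, shared=False) - embedding_params(32_000, 576, shared=True)

print("query head -> key/value head:", mapping)
print("block execution order:", schedule)
print(f"parameters saved by sharing embeddings: {saved:,}")
```

With these illustrative numbers, nine query heads need only three sets of keys and values, three stored blocks yield six layers of computation, and sharing the embedding table saves one full vocabulary-by-dimension matrix.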
