Claude Code revenue jumps 5.5x as Anthropic launches analytics dashboard

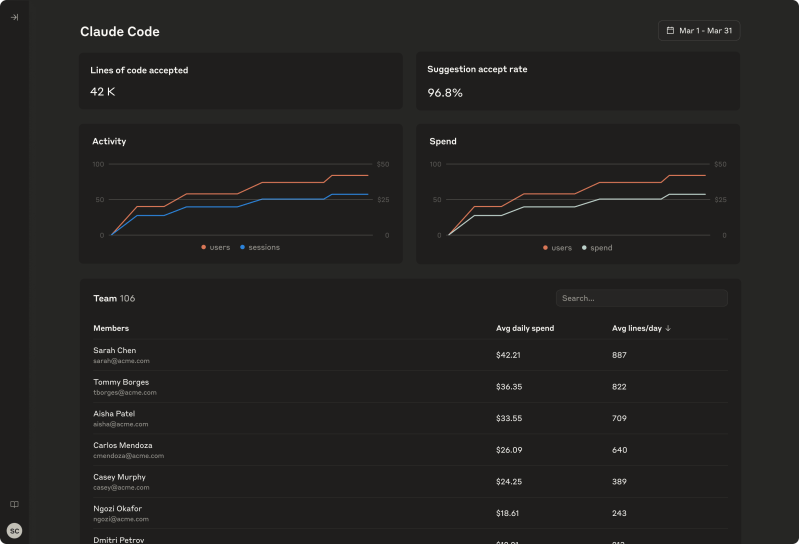

Anthropic announced today it is rolling out a comprehensive analytics dashboard for its Claude Code AI programming assistant, addressing one of the most pressing concerns for enterprise technology leaders: understanding whether their investments in AI coding tools are actually paying off.

The new dashboard will provide engineering managers with detailed metrics on how their teams use Claude Code, including lines of code accepted, suggestion accept rates, total user activity over time, total spend over time, average daily spend for each user, and average daily lines of code accepted for each user.

The feature comes as companies increasingly demand concrete data to justify their AI spending amid a broader enterprise push to measure artificial intelligence’s return on investment.

“When you’re overseeing a big engineering team, you want to know what everyone’s doing, and that can be very difficult,” said Adam Wolff, who manages Anthropic’s Claude Code team and previously served as head of engineering at Robinhood. “It’s hard to measure, and we’ve seen some startups in this space trying to address this, but it’s valuable to gain insights into how people are using the tools that you give them.”

The dashboard addresses a fundamental challenge facing technology executives: As AI-powered development tools become standard in software engineering, managers lack visibility into which teams and individuals are benefiting most from these expensive premium tools. Claude Code pricing starts at $17 per month for individual developers, with enterprise plans reaching significantly higher price points.

A screenshot of Anthropic’s new analytics dashboard for Claude Code shows usage metrics, spending data and individual developer activity for a team of engineers over a one-month period. (Credit: Anthropic)

Companies demand proof their AI coding investments are working

The dashboard is one of the features most requested by Anthropic’s enterprise customers, signaling broader enterprise appetite for AI accountability tools. It will track commits and pull requests and provide detailed breakdowns of activity by user and cost, data that engineering leaders say is crucial for understanding how AI is changing development workflows.

“Different customers actually want to do different things with that cost,” Wolff explained. “Some were like, hey, I want to spend as much as I can on these AI enablement tools because they see it as a multiplier. Some obviously are sensibly looking to make sure that they don’t blow out their spend.”

The feature includes role-based access controls, allowing organizations to configure who can view usage data. Wolff emphasized that the system focuses on metadata rather than actual code content, addressing potential privacy concerns about employee surveillance.

“This does not contain any of the information about what people are actually doing,” he said. “It’s more the meta of, like, how much are they using it, you know, like, which tools are working? What kind of tool acceptance rate do you see, things that you would use to tweak your overall deployment.”

Claude Code revenue jumps 5.5x as developer adoption surges

The dashboard launch comes amid extraordinary growth for Claude Code since Anthropic introduced its Claude 4 models in May.
The platform has seen active user base growth of 300% and run-rate revenue expansion of more than 5.5 times, according to company data.

“Claude Code is on a roll,” Wolff told VentureBeat. “We’ve seen five and a half times revenue growth since we launched the Claude 4 models in May. That gives you a sense of the deluge in demand we’re seeing.”

The customer roster includes prominent technology companies like Figma, Rakuten, and Intercom, representing a mix of design tools, e-commerce platforms, and customer service technology providers. Wolff noted that many additional enterprise customers are using Claude Code but haven’t yet granted permission for public disclosure.

The growth trajectory reflects broader industry momentum around AI coding assistants. GitHub’s Copilot, Microsoft’s AI-powered programming tool, has amassed millions of users, while newer entrants like Cursor and recently acquired Windsurf have gained traction among developers seeking more powerful AI assistance.

Premium pricing strategy targets enterprise customers willing to pay more

Claude Code positions itself as a premium enterprise solution in an increasingly crowded market of AI coding tools. Unlike some competitors that focus primarily on code completion, Claude Code offers what Anthropic calls “agentic” capabilities: the ability to understand entire codebases, make coordinated changes across multiple files, and work directly within existing development workflows.

“This is not cheap. This is a premium tool,” Wolff said. “The buyer has to understand what they’re getting for it. When you see these metrics, it’s pretty clear that developers are using these tools, and they’re making them more productive.”

The company targets organizations with dedicated AI enablement teams and substantial development operations. Wolff said the most tech-forward companies are leading adoption, particularly those with internal teams focused on AI integration.
“Certainly companies that have their own AI enablement teams, they love Claude Code because it’s so customizable, it can be deployed with the right set of tools and prompts and permissions that work really well for their organization,” he explained.

Traditional industries with large developer teams are showing increasing interest, though adoption timelines remain longer as these organizations navigate procurement processes and deployment strategies.

AI coding assistant market heats up as tech giants battle for developers

The analytics dashboard responds directly to enterprise feedback about measuring AI tool effectiveness, a challenge facing the entire AI coding assistant market. While competitors like GitHub Copilot and newer entrants focus primarily on individual developer productivity, Anthropic is betting that enterprise customers need comprehensive organizational insights. Amazon recently launched Kiro, its
