White House plan signals “open-weight first” era—and enterprises need new guardrails

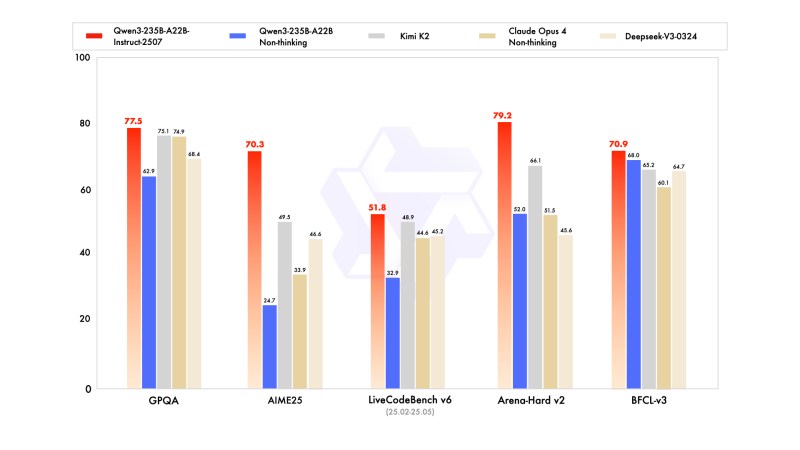

U.S. President Donald Trump signed the AI Action Plan, which outlines a path for the U.S. to lead in the AI race. For enterprises already in the throes of deploying AI systems, the rules are a clear indication of how this administration intends to treat AI going forward, and they could signal how providers will approach AI development.

Much like the AI executive order Joe Biden signed in 2023, Trump’s order is not a legislative act, so it primarily concerns government offices, directing how they can contract with AI model and application providers. The plan may not affect enterprises directly or immediately, but analysts noted that any time the government takes a position on AI, the ecosystem changes.

“This plan will likely shape the ecosystem we all operate in — one that rewards those who can move fast, stay aligned and deliver real-world outcomes,” Matt Wood, commercial technology and innovation officer at PwC, told VentureBeat in an email. “For enterprises, the signal is clear: the pace of AI adoption is accelerating, and the cost of lagging is going up. Even if the plan centers on federal agencies, the ripple effects — in procurement, infrastructure, and norms — will reach much further. We’ll likely see new government-backed testbeds, procurement programs, and funding streams emerge — and enterprises that can partner, pilot, or productize in this environment will be well-positioned.”

He added that the Action Plan “is not a blueprint for enterprise AI.” Still, enterprises should expect an AI development environment that prioritizes speed, scale and experimentation, with less reliance on regulatory shelters. Companies working with the government should also be prepared for additional scrutiny of the models and applications they use, to ensure alignment with the government’s values.

The Action Plan outlines how government agencies can collaborate with AI companies, which tasks they should prioritize (such as investing in infrastructure and encouraging AI development), and guidelines for exporting and importing AI tools.

Charleyne Biondi, assistant vice president and analyst at Moody’s Ratings, said the plan “highlights AI’s role as an increasingly strategic asset and core driver of economic transformation.” She noted that the plan doesn’t address regulatory fragmentation. “However, current regulatory fragmentation across U.S. states could create uncertainty for developers and businesses. Striking the right balance between innovation and safety and between national ambition and regulatory clarity will be critical to ensure continued enterprise adoption and avoid unintended slowdowns,” she said.

What is inside the action plan

The AI Action Plan is broken down into three pillars: accelerating AI innovation, building American AI infrastructure, and leading in international AI diplomacy and security.

The headline piece of the AI Action Plan centers on “ensuring free speech and American values,” a significant talking point for this administration. It instructs the National Institute of Standards and Technology (NIST) to remove references to misinformation and to diversity, equity and inclusion.
It also bars agencies from working with foundation models that have “top-down agendas.” It’s unclear how the government expects existing models and datasets to follow suit, or what this kind of AI would look like. Enterprises are especially concerned about the potentially controversial statements AI systems can make, as the recent Grok kerfuffle showed.

The plan also orders NIST to research and publish findings on whether models from China, such as DeepSeek, Qwen and Kimi, are aligned with the Chinese Communist Party.

However, the most consequential positions involve supporting open-source systems, creating a new testing and evaluation ecosystem, and streamlining the process for building data centers. Through the plan, the Department of Energy and the National Science Foundation are directed to develop “AI testbeds for piloting AI systems in secure, real-world settings,” allowing researchers to prototype systems. It also removes much of the red tape associated with evaluating and safety-testing models.

What has excited many in the industry is the explicit support for open-source AI and open-weight models. “We need to ensure America has leading open models founded on American values. Open-source and open-weight models could become global standards in some areas of business and academic research worldwide. For that reason, they also have geostrategic value. While the decision of whether and how to release an open or closed model is fundamentally up to the developer, the Federal government should create a supportive environment for open models,” the plan said.
Understandably, open-source proponents like Hugging Face’s Clement Delangue praised the decision in a July 23, 2025, social media post: “It’s time for the American AI community to wake up, drop the ‘open is not safe’ bullshit, and return to its roots: open science and open-source AI, powered by an unmatched community of frontier labs, big tech, startups, universities, and non‑profits.”

BCG X North America chair Sesh Iyer told VentureBeat this would give enterprises more confidence in adopting open-source LLMs and could also encourage more closed-source providers “to rethink proprietary strategies and potentially consider releasing model weights.”

The plan does say that cloud providers should prioritize the Department of Defense, which could bump some enterprises down an already crowded waiting list.

A little more clarity on rules

The AI Action Plan is more akin to an executive order and can only direct government agencies under the purview of the executive branch. Full AI regulation,
