5 key questions your developers should be asking about MCP

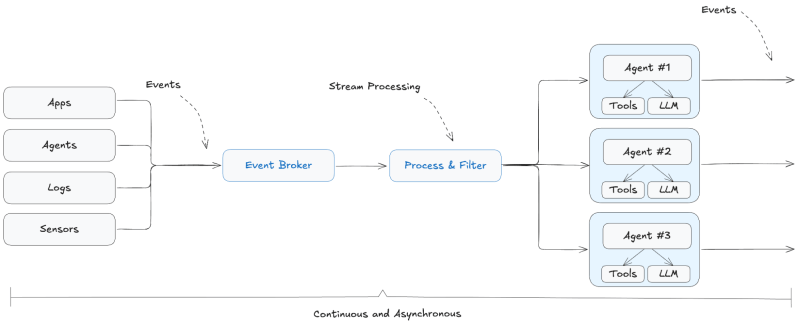

The Model Context Protocol (MCP) has become one of the most talked-about developments in AI integration since its introduction by Anthropic in late 2024. If you’re tuned into the AI space at all, you’ve likely been inundated with developer “hot takes” on the topic. Some think it’s the best thing ever; others are quick to point out its shortcomings. In reality, there’s some truth to both.

One pattern I’ve noticed with MCP adoption is that skepticism typically gives way to recognition: This protocol solves genuine architectural problems that other approaches don’t. I’ve gathered a list of questions below that reflect the conversations I’ve had with fellow builders who are considering bringing MCP to production environments.

1. Why should I use MCP over other alternatives?

Most developers considering MCP are already familiar with implementations like OpenAI’s custom GPTs, vanilla function calling, the Responses API with function calling, and hardcoded connections to services like Google Drive. The question isn’t really whether MCP fully replaces these approaches. Under the hood, you could absolutely use the Responses API with function calling that still connects to MCP. What matters here is the resulting stack.

Despite all the hype about MCP, here’s the straight truth: It’s not a massive technical leap. MCP essentially “wraps” existing APIs in a way that’s understandable to large language models (LLMs). Sure, a lot of services already have an OpenAPI spec that models can use. For small or personal projects, the objection that MCP “isn’t that big a deal” is pretty fair.

The practical benefit becomes obvious when you’re building something like an analysis tool that needs to connect to data sources across multiple ecosystems. Without MCP, you have to write a custom integration for each combination of data source and LLM you want to support. With MCP, you implement the data source connections once, and any compatible AI client can use them.

2. Local vs. remote MCP deployment: What are the actual trade-offs in production?

This is where you really start to see the gap between reference servers and reality. Local MCP deployment using the stdio transport is dead simple to get running: Spawn a subprocess for each MCP server and let it talk to the client through stdin/stdout. Great for a technical audience, difficult for everyday users.

Remote deployment addresses that scaling problem but opens up a can of worms around transport complexity. The original HTTP+SSE approach was replaced by a March 2025 streamable HTTP update, which tries to reduce complexity by putting everything through a single endpoint. Even so, this isn’t really needed for most companies that are likely to build MCP servers.

But here’s the thing: A few months later, support is spotty at best. Some clients still expect the old HTTP+SSE setup, while others work with the new approach. So, if you’re deploying today, you’re probably going to have to support both. Protocol detection and dual transport support are a must.

Authorization is another variable you’ll need to consider with remote deployments. The OAuth 2.1 integration requires mapping tokens between external identity providers and MCP sessions. While this adds complexity, it’s manageable with proper planning.

3. How can I be sure my MCP server is secure?
This is probably the biggest gap between the MCP hype and what you actually need to tackle for production. Most showcases or examples you’ll see use local connections with no authentication at all, or they handwave the security by saying “it uses OAuth.”

The MCP authorization spec does leverage OAuth 2.1, which is a proven open standard. But there’s always going to be some variability in implementation. For production deployments, focus on the fundamentals:

- Proper scope-based access control that matches your actual tool boundaries
- Direct (local) token validation
- Audit logs and monitoring for tool use

However, the biggest security consideration with MCP is around tool execution itself. Many tools need (or think they need) broad permissions to be useful, which means sweeping scope design (like a blanket “read” or “write”) is inevitable. Even without a heavy-handed approach, your MCP server may access sensitive data or perform privileged operations. So, when in doubt, stick to the best practices recommended in the latest MCP auth draft spec.

4. Is MCP worth investing resources and time into, and will it be around for the long term?

This gets to the heart of any adoption decision: Why should I bother with a flavor-of-the-quarter protocol when everything AI is moving so fast? What guarantee do you have that MCP will be a solid choice (or even around) in a year, or even six months?

Well, look at MCP’s adoption by major players: Google supports it alongside its Agent2Agent protocol, Microsoft has integrated MCP with Copilot Studio and is even adding built-in MCP features for Windows 11, and Cloudflare is more than happy to help you fire up your first MCP server on its platform. Similarly, the ecosystem growth is encouraging, with hundreds of community-built MCP servers and official integrations from well-known platforms.

In short, the learning curve isn’t terrible, and the implementation burden is manageable for most teams or solo devs. It does what it says on the tin.
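
To give a rough sense of that implementation burden, here is a minimal Python sketch of the core of a local stdio-style server: newline-delimited JSON-RPC 2.0 messages read from stdin, with replies written to stdout. It only handles the `tools/list` and `tools/call` methods and skips the initialization handshake, notifications, and input validation that a real MCP server needs. The `TOOLS` registry, the `add` tool, and the `handle_message` and `serve` helpers are hypothetical names made up for illustration, not part of any official SDK.

```python
import json
import sys

# Hypothetical tool registry for illustration: name -> (description, handler).
TOOLS = {
    "add": ("Add two integers.", lambda args: str(args["a"] + args["b"])),
}


def _reply(request_id, **body):
    """Build one JSON-RPC 2.0 response as a single line of JSON."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, **body})


def handle_message(line):
    """Handle one JSON-RPC request line and return the response line."""
    request = json.loads(line)
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params") or {}

    if method == "tools/list":
        tools = [
            {"name": name, "description": desc, "inputSchema": {"type": "object"}}
            for name, (desc, _handler) in TOOLS.items()
        ]
        return _reply(request_id, result={"tools": tools})

    if method == "tools/call":
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            # -32602 is the JSON-RPC "Invalid params" error code.
            return _reply(request_id, error={"code": -32602, "message": "Unknown tool"})
        _desc, handler = entry
        text = handler(params.get("arguments") or {})
        return _reply(request_id, result={"content": [{"type": "text", "text": text}]})

    # -32601 is the JSON-RPC "Method not found" error code.
    return _reply(request_id, error={"code": -32601, "message": "Method not found"})


def serve(stdin=sys.stdin, stdout=sys.stdout):
    """Read requests line by line and write one response line per request."""
    for line in stdin:
        if line.strip():
            stdout.write(handle_message(line) + "\n")
            stdout.flush()
```

Calling `serve()` from a script would let a client that spawned the process exchange messages with it over stdin/stdout. In practice, you would more likely reach for an existing MCP SDK than hand-roll this loop, but the sketch shows that the moving parts are few.
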
So, why would I be cautious about buying into the hype? MCP is fundamentally designed for current-gen AI systems, meaning it assumes you have a human supervising a single-agent interaction. Multi-agent and autonomous tasking are two areas MCP doesn’t really address; in fairness, it doesn’t really need to. But if you’re looking for an evergreen yet still somehow bleeding-edge approach, MCP isn’t it. It’s
