MIT report misunderstood: Shadow AI economy booms while headlines cry failure

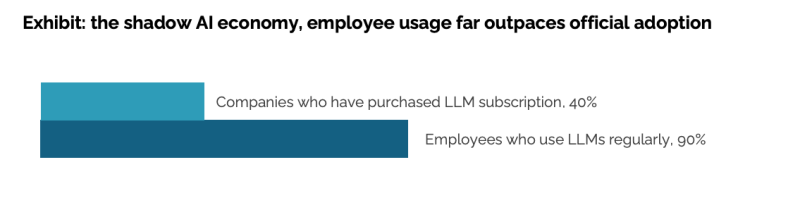

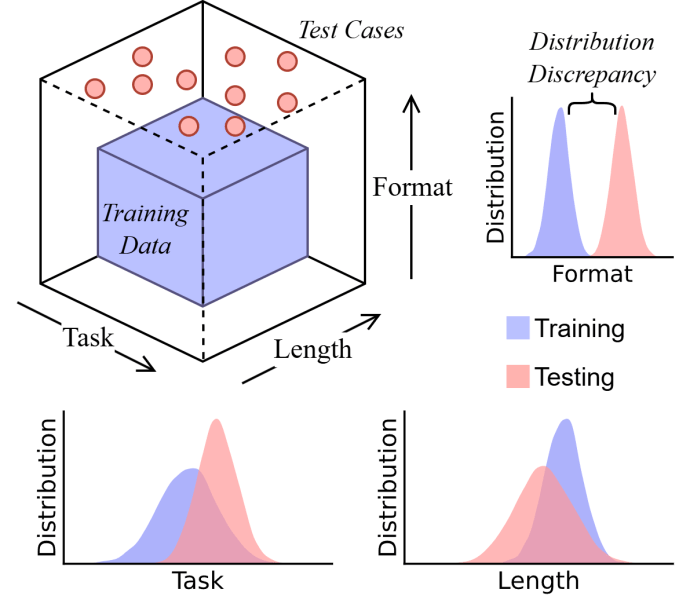

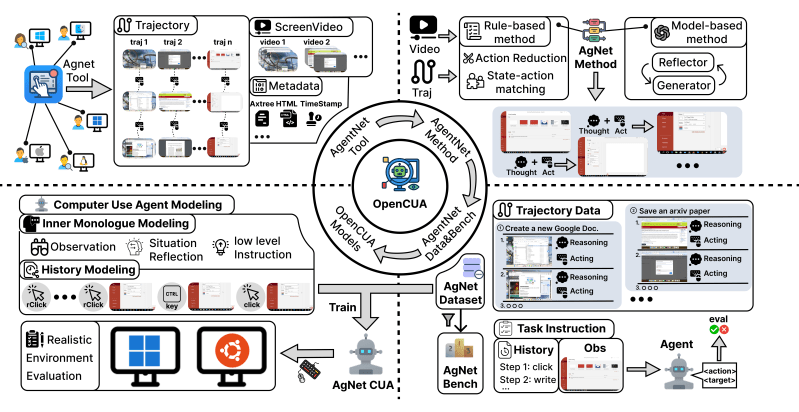

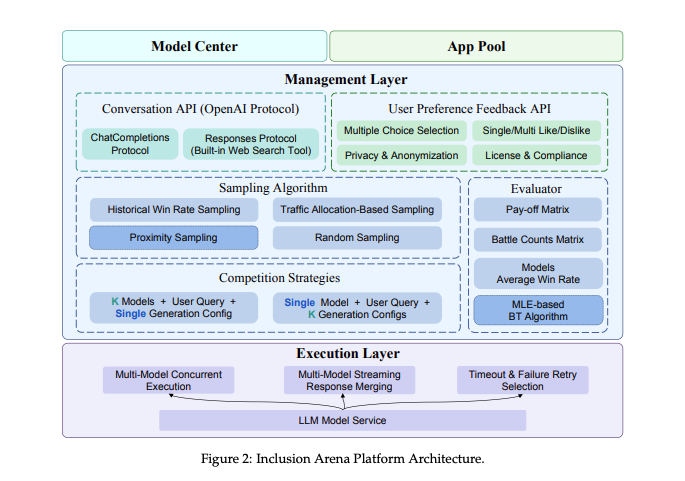

The most widely cited statistic from a new MIT report has been deeply misunderstood. While headlines trumpet that “95% of generative AI pilots at companies are failing,” the report actually reveals something far more remarkable: the fastest and most successful enterprise technology adoption in corporate history is happening right under executives’ noses.

The study, released this week by MIT’s Project NANDA, has sparked anxiety across social media and business circles, with many interpreting it as evidence that artificial intelligence is failing to deliver on its promises. But a closer reading of the 26-page report tells a starkly different story: one of unprecedented grassroots technology adoption that has quietly revolutionized work while corporate initiatives stumble.

The researchers found that employees at more than 90% of the surveyed companies regularly use personal AI tools for work, even though only 40% of those companies have official AI subscriptions. “While only 40% of companies say they purchased an official LLM subscription, workers from over 90% of the companies we surveyed reported regular use of personal AI tools for work tasks,” the study explains. “In fact, almost every single person used an LLM in some form for their work.”

Employees use personal A.I. tools at more than twice the rate of official corporate adoption, according to the MIT report. (Credit: MIT)

How employees cracked the AI code while executives stumbled

The MIT researchers discovered what they call a “shadow AI economy” where workers use personal ChatGPT accounts, Claude subscriptions and other consumer tools to handle significant portions of their jobs. These employees aren’t just experimenting; they’re using AI “multiples times a day every day of their weekly workload,” the study found.
This underground adoption has outpaced the early spread of email, smartphones, and cloud computing in corporate environments.

A corporate lawyer quoted in the MIT report exemplified the pattern: her organization invested $50,000 in a specialized AI contract analysis tool, yet she consistently used ChatGPT for drafting work because “the fundamental quality difference is noticeable. ChatGPT consistently produces better outputs, even though our vendor claims to use the same underlying technology.”

The pattern repeats across industries. Corporate systems get described as “brittle, overengineered, or misaligned with actual workflows,” while consumer AI tools win praise for “flexibility, familiarity, and immediate utility.” As one chief information officer told researchers: “We’ve seen dozens of demos this year. Maybe one or two are genuinely useful. The rest are wrappers or science projects.”

The 95% failure rate that has dominated headlines applies specifically to custom enterprise AI solutions: the expensive, bespoke systems companies commission from vendors or build internally. These tools fail because they lack what the MIT researchers call “learning capability.” Most corporate AI systems “do not retain feedback, adapt to context, or improve over time,” the study found. Users complained that enterprise tools “don’t learn from our feedback” and cited “too much manual context required each time.”

Consumer tools like ChatGPT succeed because they feel responsive and flexible, even though they reset with each conversation. Enterprise tools feel rigid and static, requiring extensive setup for each use. The learning gap creates a strange hierarchy in user preferences.
For quick tasks like emails and basic analysis, 70% of workers prefer AI over human colleagues. But for complex, high-stakes work, 90% still want humans. The dividing line isn’t intelligence; it’s memory and adaptability.

General-purpose A.I. tools like ChatGPT reach production 40% of the time, while task-specific enterprise tools succeed only 5% of the time. (Credit: MIT)

The hidden billion-dollar productivity boom happening under IT’s radar

Far from showing AI failure, the shadow economy reveals massive productivity gains that don’t appear in corporate metrics. Workers have solved integration challenges that stymie official initiatives, proving AI works when implemented correctly.

“This shadow economy demonstrates that individuals can successfully cross the GenAI Divide when given access to flexible, responsive tools,” the report explains. Some companies have started paying attention: “Forward-thinking organizations are beginning to bridge this gap by learning from shadow usage and analyzing which personal tools deliver value before procuring enterprise alternatives.”

The productivity gains are real and measurable, just hidden from traditional corporate accounting. Workers automate routine tasks, accelerate research, and streamline communication, all while their companies’ official AI budgets produce little return.

Workers prefer A.I. for routine tasks like emails but still trust humans for complex, multi-week projects. (Credit: MIT)

Why buying beats building: external partnerships succeed twice as often

Another finding challenges conventional tech wisdom: companies should stop trying to build AI internally. External partnerships with AI vendors reached deployment 67% of the time, compared to 33% for internally built tools. The most successful implementations came from organizations that “treated AI startups less like software vendors and more like business service providers,” holding them to operational outcomes rather than technical benchmarks.
These companies demanded deep customization and continuous improvement rather than flashy demos. “Despite conventional wisdom that enterprises resist training AI systems, most teams in our interviews expressed willingness to do so, provided the benefits were clear and guardrails were in place,” the researchers found. The key was partnership, not just purchasing.

Seven industries avoiding disruption are actually being smart

The MIT report found that only technology and media sectors show meaningful structural change from AI, while seven major industries, including healthcare, finance, and manufacturing, show “significant pilot activity but little to no structural change.”

This measured approach isn’t a failure; it’s wisdom. Industries avoiding disruption are being thoughtful about implementation rather than rushing into chaotic change. In healthcare and energy, “most executives report no current or anticipated hiring reductions over the next five years.” Technology and media move faster because they can absorb more risk.
