OpenAI, Microsoft tell Senate ‘no one country can win AI’

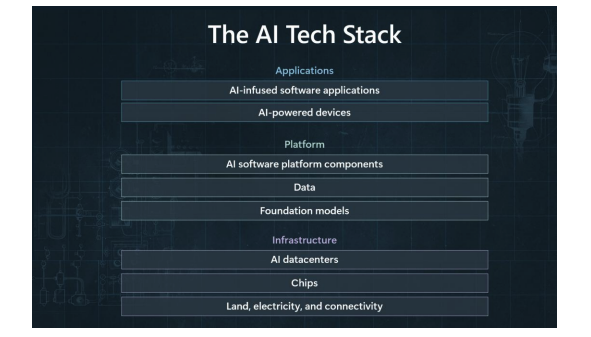

The Trump administration rescinded an Executive Order from former President Joe Biden that created rules around the development and deployment of AI. Since then, the government has stepped back from regulating the technology.

In a hearing of more than three hours before the Senate Committee on Commerce, Science and Transportation, executives including OpenAI CEO Sam Altman, AMD CEO Lisa Su, CoreWeave co-founder and CEO Michael Intrator and Microsoft Vice Chair and President Brad Smith urged policymakers to ease the process of building AI infrastructure.

The executives told policymakers that faster permitting for new data centers, the power plants that energize them and even chip fabrication plants would be crucial to shoring up the AI tech stack and keeping the country competitive against China. They also spoke about the need for more skilled workers such as electricians, easier immigration for software talent and encouragement of “AI diffusion,” the adoption of generative AI models in the U.S. and worldwide.

Altman, fresh from visiting the company’s $500 billion Stargate project in Texas, told senators that the U.S. is leading the charge in AI but needs more infrastructure, such as power plants, to fuel its next phase.

“I believe the next decade will be about abundant intelligence and abundant energy. Making sure that America leads in both of those, that we are able to usher in these dual revolutions that will change the world we live in [in] incredibly positive ways is critical,” Altman said.

The hearing came as the Trump administration is still determining how much influence the government will have in the AI space. Sen. Ted Cruz of Texas, chair of the committee, said he proposed creating an AI regulatory sandbox.

Microsoft’s Smith said in his written testimony that American AI companies need to continue innovating because “it is a race that no company or country can win by itself.”

Supporting the AI tech stack

Smith laid out the AI tech stack, which he said shows how important each segment of the sector is to innovation.

“We’re all in this together. If the United States is gonna succeed in leading the world in AI, it requires infrastructure, it requires success at the platform level, it requires people who create applications,” Smith said. He added, “Innovation will go faster with more infrastructure, faster permitting and more electricians.”

AMD’s Su reiterated that “maintaining our lead actually requires excellence at every layer of the stack.”

“I think open ecosystems are really a cornerstone of U.S. leadership, and that allows ideas to come from everywhere and every part of the innovation sector,” Su said. “It’s reducing barriers to entry and strengthening security as well as creating a competitive marketplace for ideas.”

As AI models need ever more GPUs for training, improving chip production, building more data centers and finding ways to power them have become even more critical. The CHIPS and Science Act, a Biden-era law, was meant to jumpstart semiconductor production in the U.S., but manufacturing the chips needed to power the world’s most powerful models domestically is proving slow and expensive. In recent months, companies such as Cerebras have announced plans to build more data centers for model training and inference.

A break from current policies

Republican senators, who hold the majority, made it clear during the hearing that the Trump administration would prefer not to regulate AI development, favoring a more market-driven, hands-off approach. The administration has also pushed for more U.S.-focused growth, demanding that businesses use American products and create more American jobs.

However, the executives noted that for American AI to remain competitive, companies need access to international talent and, more importantly, clear export policies so that models made in the U.S. are attractive to other countries.

“We need faster adoption, what people refer to as AI diffusion. The ability to put AI to work across every part of the American economy to boost productivity, to boost economic growth, to enable people to innovate in their work,” Smith said. “If America is gonna lead the world, we need to connect with the world. Our global leadership relies on our ability to serve the world with the right approach and on our ability to sustain the trust of the rest of the world.”

He added that removing quantitative caps for tier-two countries is essential because these policies “sent a message to 120 nations that couldn’t count on us to provide the AI they want and need.”

Altman noted, “There will be great chips and models trained around the world,” while reiterating American companies’ leading position in the space.

There was some good news on AI diffusion: while the hearing was ongoing, the Commerce Department announced it was modifying Biden administration rules that limited which countries could receive chips made by American companies. The rule had been set to take effect on May 15.

While the executives said government standards would be helpful, they decried any move to “pre-approve” model releases, similar to the EU’s approach.

Open ecosystem

Generative AI occupies a liminal space in tech regulation. On the one hand, the comparative lack of rules has allowed companies like OpenAI to develop technology without much fear of repercussions. On the other hand, AI, like the internet and social media before it, touches people’s lives professionally and personally.

In some ways, the executives veered away from how the Trump administration has positioned U.S. growth.

The hearing showed that while AI companies want government support to speed up the expansion of AI infrastructure, they also depend on openness to the rest of the world. The industry requires talent from abroad, and it needs to sell products and platforms to other countries.

Social media commentary varied, with some pointing out that executives, Altman in particular, had previously expressed different views on regulation.

2023 Sam Altman: Tells Congress a new agency should be
