The great cognitive migration: How AI is reshaping human purpose, work and meaning

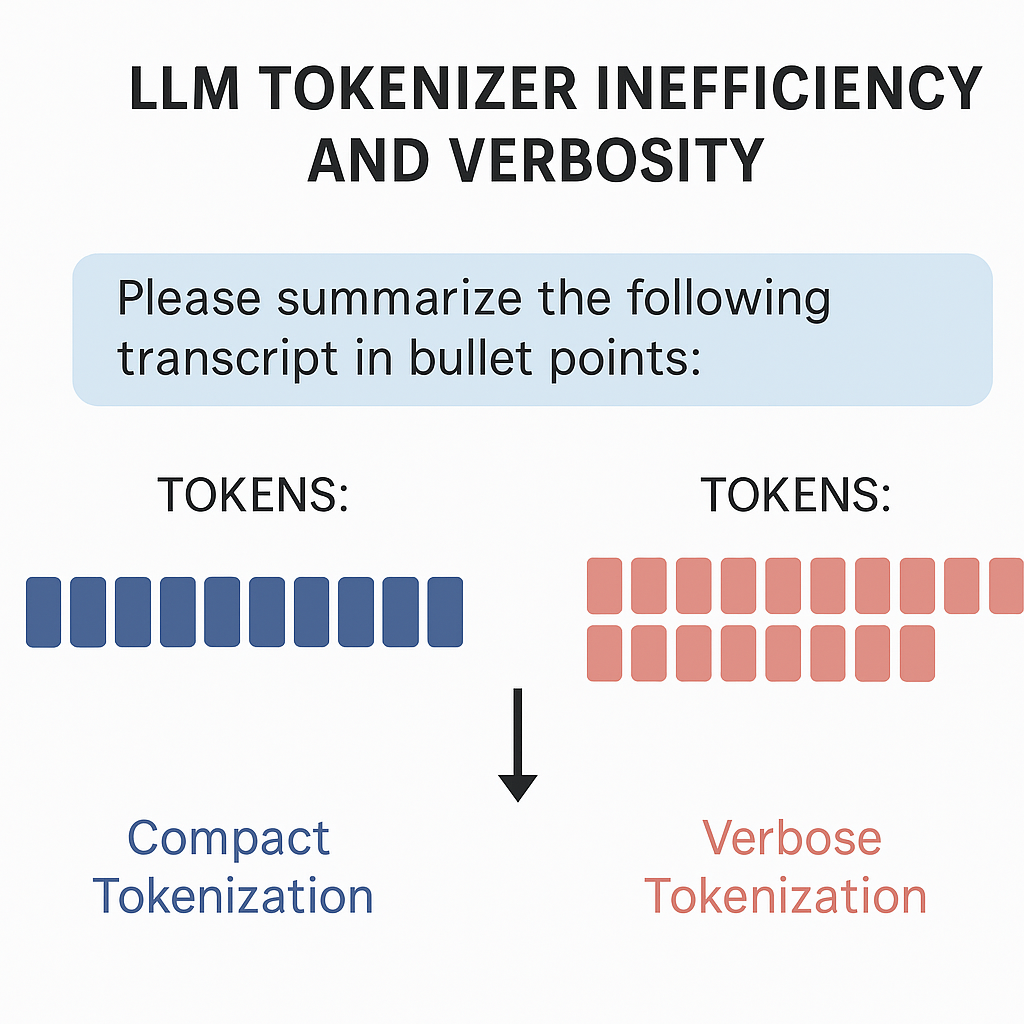

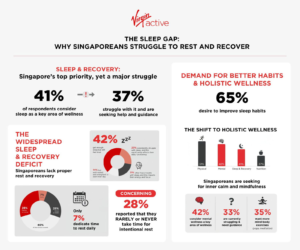

Humans have always migrated to survive. When glaciers advanced, when rivers dried up, when cities fell, people moved. Their journeys were often painful, but necessary, whether across deserts, mountains or oceans. Today, we are entering a new kind of migration, not across geography but across cognition.

AI is reshaping the cognitive landscape faster than any technology before it. In the last two years, large language models (LLMs) have achieved PhD-level performance across many domains. AI is reshaping our mental map much as an earthquake can upset the physical landscape. The rapidity of this change has led to a seemingly watchful inaction: We know a migration is coming soon, but we are unable to imagine exactly how or when it will unfold. But, make no mistake, the early stage of a staggering transformation is underway.

Tasks once reserved for educated professionals (including authoring essays, composing music, drafting legal contracts and diagnosing illnesses) are now performed by machines at breathtaking speed. Not only that, but the latest AI systems can make fine-grained inferences and connections long thought to require unique human insight, further accelerating the need for migration. For example, in a New Yorker essay, Princeton history of science professor Graham Burnett marveled at how Google’s NotebookLM made an unexpected and illuminating link between theories from Enlightenment philosophy and a modern TV advertisement.

As AI grows more capable, humans will need to embrace new domains of meaning and value in areas where machines still falter, and where human creativity, ethical reasoning, emotional resonance and the weaving of generational meaning remain indispensable.
This “cognitive migration” will define the future of work, education and culture, and those who recognize and prepare for it will shape the next chapter of human history.

Where machines advance, humans must move

Like climate migrants who must leave their familiar surroundings due to rising tides or growing heat, cognitive migrants will need to find new terrain where their contributions can have value. But where and how exactly will we do this?

Moravec’s Paradox provides some insight. This phenomenon is named for Austrian-born scientist Hans Moravec, who observed in the 1980s that tasks humans find difficult are easy for a computer, and vice versa. Or, as computer scientist and futurist Kai-Fu Lee has said: “Let us choose to let machines be machines, and let humans be humans.”

Moravec’s insight provides us with an important clue. People excel at tasks that are intuitive, emotional and deeply tied to embodied experience, areas where machines still falter. Successfully navigating a crowded street, recognizing sarcasm in conversation and intuiting that a painting feels melancholy are all feats of perception and judgment that millions of years of evolution have etched deep into human nature. In contrast, machines that can ace a logic puzzle or summarize a thousand-page novel often stumble at tasks we consider second nature.

The human domains AI cannot yet reach

As AI rapidly advances, the safe terrain for human endeavor will migrate toward creativity, ethical reasoning, emotional connection and the weaving of deep meaning. The work of humans in the not-too-distant future will increasingly demand uniquely human strengths, including the cultivation of insight, imagination, empathy and moral wisdom. Like climate migrants seeking new fertile ground, cognitive migrants must chart a course toward these distinctly human domains, even as the old landscapes of labor and learning shift under our feet.

Not every job will be swept away by AI.
Unlike geographical migrations, which might have clearer starting points, cognitive migration will unfold gradually at first, and unevenly across different sectors and regions. The diffusion of AI technologies and their impact may take a decade or two. Many roles that rely on human presence, intuition and relationship-building may be less affected, at least in the near term. These roles include a range of skilled professions, from nurses to electricians and frontline service workers. They often require nuanced judgment, embodied awareness and trust, which are human attributes for which machines are not always suited.

Cognitive migration, then, will not be universal. But the broader shift in how we assign value and purpose to human work will still ripple outward. Even those whose tasks remain stable may find their work and meaning reshaped by a world in flux.

Some promote the idea that AI will unlock a world of abundance where work becomes optional, creativity flourishes and society thrives on digital productivity. Perhaps that future will come. But we cannot ignore the monumental transition it will require. Jobs will change faster than many people can realistically adapt. Institutions, built for stability, will inevitably lag. Purpose will erode before it is reimagined. If abundance is the promised land, then cognitive migration is the required, if uncertain, journey to reach it.

The uneven road ahead

Just as in climate migration, not everyone will move easily or equally. Our schools are still training students for a world that is vanishing, not the one that is emerging. Many organizations cling to efficiency metrics that reward repeatable output, the very thing at which AI can now outperform us. And far too many individuals will be left wondering where their sense of purpose fits in a world where machines can do what they once proudly did.

Human purpose and meaning are likely to undergo significant upheaval.
For centuries, we have defined ourselves by our ability to think, reason and create. Now, as machines take on more of those functions, the questions of our place and value become unavoidable. If AI-driven job losses occur on a large scale without a commensurate ability for people to find new forms of meaningful work, the psychological and social consequences could be profound.

It is possible that some cognitive migrants could slip into despair. AI scientist Geoffrey Hinton, who won the 2024 Nobel Prize in physics for his groundbreaking work on deep learning neural networks that underpin LLMs, has warned in recent years about the potential
