The new AI calculus: Google’s 80% cost edge vs. OpenAI’s ecosystem

The relentless pace of generative AI innovation shows no signs of slowing. In just the past couple of weeks, OpenAI dropped its powerful o3 and o4-mini reasoning models alongside the GPT-4.1 series, while Google countered with Gemini 2.5 Flash, rapidly iterating on its flagship Gemini 2.5 Pro released shortly before. For enterprise technical leaders navigating this dizzying landscape, choosing the right AI platform requires looking far beyond rapidly shifting model benchmarks.

While model-versus-model benchmarks grab headlines, the decision for technical leaders goes far deeper. Choosing an AI platform is a commitment to an ecosystem, impacting everything from core compute costs and agent development strategy to model reliability and enterprise integration.

But perhaps the starkest differentiator, bubbling beneath the surface but with profound long-term implications, lies in the economics of the hardware powering these AI giants. Google wields a massive cost advantage thanks to its custom silicon, potentially running its AI workloads at a fraction of the cost OpenAI incurs by relying on Nvidia’s market-dominant (and high-margin) GPUs.

This analysis delves beyond the benchmarks to compare the Google and OpenAI/Microsoft AI ecosystems across the critical factors enterprises must consider today: the significant disparity in compute economics, diverging strategies for building AI agents, the crucial trade-offs in model capabilities and reliability, and the realities of enterprise fit and distribution. The analysis builds upon an in-depth video discussion exploring these systemic shifts between me and AI developer Sam Witteveen earlier this week.

1. Compute economics: Google’s TPU “secret weapon” vs. OpenAI’s Nvidia tax

The most significant, yet often under-discussed, advantage Google holds is its “secret weapon”: its decade-long investment in custom Tensor Processing Units (TPUs). OpenAI and the broader market rely heavily on Nvidia’s powerful but expensive GPUs (like the H100 and A100). Google, on the other hand, designs and deploys its own TPUs, like the recently unveiled Ironwood generation, for its core AI workloads. This includes training and serving Gemini models.

Why does this matter? It makes a huge cost difference. Nvidia GPUs command staggering gross margins, estimated by analysts to be in the 80% range for data center chips like the H100 and upcoming B100 GPUs. This means OpenAI (via Microsoft Azure) pays a hefty premium, the “Nvidia tax,” for its compute power. Google, by designing its own TPUs rather than buying Nvidia’s chips, effectively bypasses this markup.

While manufacturing a GPU might cost Nvidia $3,000-$5,000, hyperscalers like Microsoft (supplying OpenAI) pay $20,000-$35,000+ per unit in volume, according to reports. Industry conversations and analysis suggest that Google may be obtaining its AI compute power at roughly 20% of the cost incurred by those purchasing high-end Nvidia GPUs. While the exact numbers are internal, the implication is a 4x-6x cost efficiency advantage per unit of compute for Google at the hardware level.

This structural advantage is reflected in API pricing. Comparing the flagship models, OpenAI’s o3 is roughly 8 times more expensive for input tokens and 4 times more expensive for output tokens than Google’s Gemini 2.5 Pro (for standard context lengths).

This cost differential isn’t academic; it has profound strategic implications. Google can likely sustain lower prices and offer better “intelligence per dollar,” giving enterprises more predictable long-term total cost of ownership (TCO), and that is exactly what it is doing in practice right now.
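
The hardware figures quoted above can be sanity-checked with a few lines of arithmetic. The following is a minimal sketch in Python that uses only the numbers reported in this section; the constant and function names are illustrative, and the inputs are the article's estimates rather than audited data.

```python
# Estimates quoted in this section (USD per GPU). Names are illustrative.
NVIDIA_UNIT_COST = (3_000, 5_000)      # estimated manufacturing cost range
HYPERSCALER_PRICE = (20_000, 35_000)   # reported volume price range
GOOGLE_COST_FRACTION = 0.20            # Google's estimated cost vs. Nvidia buyers


def gross_margin(price: float, cost: float) -> float:
    """Gross margin expressed as a fraction of the selling price."""
    return (price - cost) / price


def cost_advantage(fraction: float) -> float:
    """How many times cheaper compute is when paying `fraction` of the price."""
    return 1.0 / fraction


# Most conservative case: lowest price paired with highest manufacturing cost.
low_margin = gross_margin(HYPERSCALER_PRICE[0], NVIDIA_UNIT_COST[1])
# Most generous case: highest price paired with lowest manufacturing cost.
high_margin = gross_margin(HYPERSCALER_PRICE[1], NVIDIA_UNIT_COST[0])

print(f"Implied gross margin: {low_margin:.0%} to {high_margin:.0%}")
print(f"Implied cost advantage: {cost_advantage(GOOGLE_COST_FRACTION):.1f}x")
```

Under these assumptions the implied margin spans roughly 75% to 91%, which brackets the 80% range analysts cite, and paying 20% of the cost corresponds to a 5x advantage, the midpoint of the 4x-6x range.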
OpenAI’s costs, meanwhile, are intrinsically tied to Nvidia’s pricing power and the terms of its Azure deal. Indeed, compute costs represent an estimated 55-60% of OpenAI’s total $9 billion operating expenses in 2024, according to some reports, and are projected to exceed 80% in 2025 as it scales. While OpenAI’s projected revenue growth is astronomical (potentially hitting $125 billion by 2029, according to reported internal forecasts), managing this compute spend remains a critical challenge, driving its pursuit of custom silicon.

2. Agent frameworks: Google’s open ecosystem approach vs. OpenAI’s integrated one

Beyond hardware, the two giants are pursuing divergent strategies for building and deploying the AI agents poised to automate enterprise workflows.

Google is making a clear push for interoperability and a more open ecosystem. At Cloud Next two weeks ago, it unveiled the Agent-to-Agent (A2A) protocol, designed to allow agents built on different platforms to communicate, alongside its Agent Development Kit (ADK) and the Agentspace hub for discovering and managing agents. A2A adoption faces hurdles: key players like Anthropic haven’t signed on (VentureBeat reached out to Anthropic about this, but Anthropic declined to comment), and some developers debate its necessity alongside Anthropic’s existing Model Context Protocol (MCP). Still, Google’s intent is clear: to foster a multi-vendor agent marketplace, potentially hosted within its Agent Garden or via a rumored Agent App Store.

OpenAI, conversely, appears focused on creating powerful, tool-using agents tightly integrated within its own stack. The new o3 model exemplifies this, capable of making hundreds of tool calls within a single reasoning chain. Developers leverage the Responses API and Agents SDK, along with tools like the new Codex CLI, to build sophisticated agents that operate within the OpenAI/Azure trust boundary.
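
To make the idea of chaining tool calls within one reasoning run concrete, here is a minimal, vendor-neutral sketch in Python. It does not use the Responses API or the Agents SDK: `stub_model`, `TOOLS`, and `run_agent` are hypothetical stand-ins invented for illustration, and a real model would choose tools dynamically rather than follow a fixed plan.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools; a real agent might expose search, code execution, etc.
TOOLS: Dict[str, Callable[[str], str]] = {
    "upper": lambda text: text.upper(),
    "reverse": lambda text: text[::-1],
}


def stub_model(history: List[Tuple[str, str]]) -> Tuple[str, str]:
    """Stand-in for a reasoning model.

    Returns ("tool", tool_name) to request a tool call,
    or ("final", answer) when it is done.
    """
    plan = ["upper", "reverse"]  # fixed plan, purely for illustration
    calls_made = sum(1 for role, _ in history if role == "tool_result")
    if calls_made < len(plan):
        return ("tool", plan[calls_made])
    return ("final", history[-1][1])


def run_agent(task: str, max_calls: int = 100) -> Tuple[str, int]:
    """Loop until the model returns a final answer or the call budget is spent."""
    history: List[Tuple[str, str]] = [("user", task)]
    calls = 0
    while calls < max_calls:
        kind, value = stub_model(history)
        if kind == "final":
            return value, calls
        # Feed the latest text to the requested tool and record its output.
        result = TOOLS[value](history[-1][1])
        history.append(("tool_result", result))
        calls += 1
    return history[-1][1], calls


answer, tool_calls = run_agent("abc")
print(answer, tool_calls)
```

The `max_calls` budget is a design choice worth noting: when a single run can involve hundreds of tool calls, a hard cap keeps cost and latency bounded.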
While frameworks like Microsoft’s Autogen offer some flexibility, OpenAI’s core strategy seems less about cross-platform communication and more about maximizing agent capabilities vertically within its controlled environment.

The enterprise takeaway: Companies prioritizing flexibility and the ability to mix-and-match agents from various vendors (e.g., plugging a Salesforce agent into Vertex AI) may find Google’s open approach appealing. Those deeply invested in the Azure/Microsoft ecosystem or preferring a more vertically managed, high-performance agent stack might lean towards OpenAI.

3. Model capabilities: parity, performance, and pain points

The relentless release cycle means model leadership is fleeting. While OpenAI’s o3 currently edges out Gemini 2.5 Pro on some coding benchmarks like SWE-Bench Verified and Aider, Gemini 2.5 Pro matches or leads on others like GPQA and AIME. Gemini 2.5 Pro is also the overall leader on the large language model (LLM) Arena Leaderboard. For many enterprise use cases, however, the models have reached rough parity in core capabilities. The real difference lies in

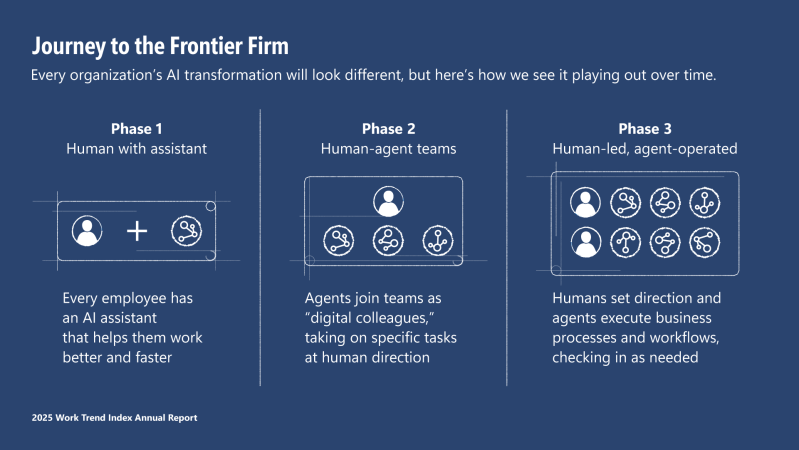
