Windsurf: OpenAI’s potential $3B bet to drive the ‘vibe coding’ movement

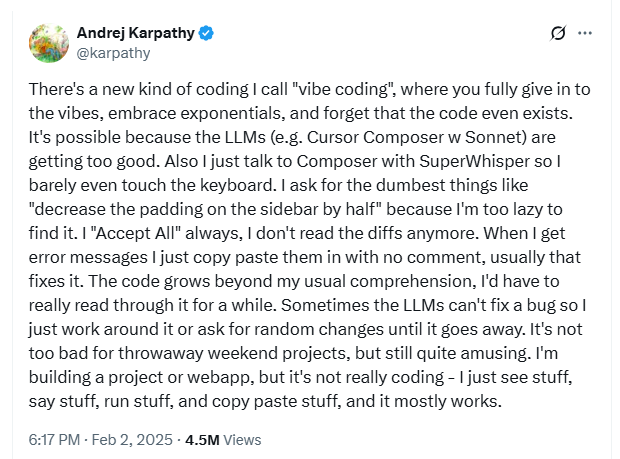

‘Vibe coding’ is a term of the moment, referring to an increasingly accepted use of AI and natural language prompts for basic code completion. OpenAI is reportedly looking to get in on the movement, and to own more of the full-stack coding experience, as it eyes a $3 billion acquisition of Windsurf (formerly Codeium). If the deal materializes, it would be OpenAI’s most expensive acquisition to date.

The news comes on the heels of the company’s release of o3 and o4-mini, which are capable of “thinking with images,” meaning they can more intuitively understand even low-quality sketches and diagrams. That release followed the launch of the GPT-4.1 model family. The AI company nobody can stop talking about also recently raised a $40 billion funding round.

Industry watchers and insiders have been abuzz about the potential deal, as it could not only make OpenAI an even bigger industry player than it already is, but also further accelerate the cultural adoption of vibe coding.

“Windsurf could be game-changing for OpenAI because it is one of the tools that developers are racing to,” Lisa Martin, research director at The Futurum Group, told VentureBeat. “This deal could solidify OpenAI as a developer’s best friend.”

A bet on vibe coding?

AI-assisted coding isn’t a new concept by a long shot, but “vibe coding” (a term coined by OpenAI cofounder Andrej Karpathy) is a relatively new approach that leverages generative AI and natural language prompts to automate coding tasks. This sets it apart from other AI coding assistants, as well as from no-code and low-code tools that rely on visual drag-and-drop elements. Vibe coding is all about incorporating AI into end-to-end development workflows, with the focus on intent rather than manual coding minutiae.

Windsurf is among the top tools in the space, along with Cursor, Replit, Lovable, Bolt, Devin and Aider.
Earlier this month, Windsurf released Wave 6, an update aimed at common workflow bottlenecks.

“Windsurf has been leading the charge in building truly AI-native development tools, helping developers accelerate delivery without compromising on experience,” said Mitchell Johnson, chief product development officer at software security firm Sonatype. “Like early open source, this started as ‘outlaw tech,’ but it’s quickly becoming foundational.”

Andrew Hill, CEO and co-founder of crowdsourced AI agent platform Recall, said the potential acquisition is “a bet on vibe coding as the future of software development.” Windsurf, he said, offers fast feedback loops, good defaults and “just the right toggles” for people with the intuition to guide AI toward solving their problems. It is also an environment designed for co-creation.

“Let the coding leapfrogging commence from Replit, Claude, Cursor, Windsurf: what’s next?” said Hill, calling vibe coding a “productivity unlock.” “The best agents will be built by humans who can vibe through a hundred ideas in a weekend.”

OpenAI owning more of the stack

Others note that if OpenAI does acquire Windsurf, the deal would signal a clear move to own more of the full-stack coding experience rather than just supplying the underlying models.

“Windsurf has focused on developer-centric workflows, not just raw code generation, which aligns with the growing need for contextual and collaborative coding tools,” said Kaveh Vahdat, AI industry watcher and founder of RiseAngle and RiseOpp.

Arvind Rongala, CEO of corporate training services company Edstellar, called it more of a power move than a software grab. With vibe coding, he said, developers want environments that are “expressive, intuitive and nearly collaborative, rather than merely text editors.” With Windsurf, OpenAI would have direct access to the next generation of code creation and sharing, he noted, and the plan is vertical integration. “The intelligence layer already belongs to OpenAI. It wants the canvas now.”

By owning the creative tools that developers use for hours every day, OpenAI would gain enormous power over not just what is developed but how it is built, said Rongala. “This isn’t about taking market share away from Replit or GitHub,” he said. “Making such platforms seem antiquated is the goal.”

A strategy move or a scramble?

Vahdat pointed out that a Windsurf acquisition would put OpenAI in more direct competition with GitHub Copilot and Amazon CodeWhisperer, both of which are backed by platform giants. “The real value here is not just in the tool itself but in the distribution and user behavior data that comes with it,” he said. “That kind of insight is strategically important for improving AI coding systems at scale.”

The move is especially interesting because it could position OpenAI more directly against Microsoft, even though the two are close partners on tools like GitHub Copilot, noted Brian Jackson, principal research director at Info-Tech Research Group. A deal would support OpenAI’s “larger strategy of moving beyond simple chat interactions and becoming a tool that helps users take real action and automate everyday workflows,” he said.

Still, Sonatype’s Johnson raised a concern: what if Windsurf becomes tightly coupled with OpenAI’s ecosystem? Developers benefit most, he said, when tools can integrate freely with whichever AI models suit their needs, whether GPT, Claude or open-source models. “If ownership limits that flexibility, it could introduce a form of vendor lock-in that slows the very momentum Windsurf helped create,” he said.

Some OpenAI critics, meanwhile, see the deal as a desperate move. Matt Murphy, a partner at Menlo Ventures, called Anthropic superior at coding, arguing that the company has the best models and the strongest partnerships. “OpenAI’s move here feels like a scramble to close the gap, but it risks alienating key allies and still doesn’t address the core issue: Claude is the better model,” he posited.