TD Securities taps Layer 6 and OpenAI to deliver real-time equity insights to sales and trading teams

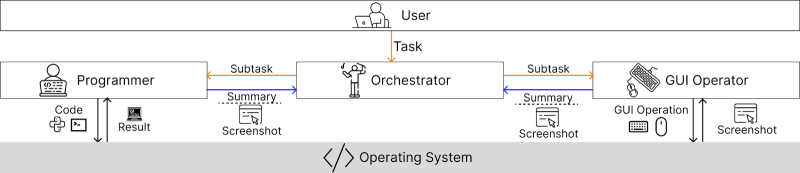

Although it is highly regulated, equity trading has consistently been at the forefront of technological innovation in the financial services sector. However, when it comes to agents and AI applications, many banks have taken a more cautious approach to adoption.

TD Securities, the equity and securities trading arm of TD Bank, rolled out its TD AI Virtual Assistant on July 8. The assistant is aimed at front-office institutional sales, trading and research professionals and is designed to help them manage their workflows.

TD Securities CIO Dan Bosman told VentureBeat that the virtual assistant's primary goal is to help front-office equity sales and trading staff gain client insights and access research.

“The first version of this began as a pilot, which we then subsequently scaled,” Bosman said. “It’s really about accessing that equity research data that our analysts put out and bringing it to the hands of the sales team in a way that’s sales-friendly.”

Bosman noted that a trading floor has its own lingo, and the context in which users ask questions can be highly specific. As a result, the AI assistant has to sound natural and intuitive, and it has to access the insights generated by traders.

Building TD AI

Bosman said the idea for the AI assistant came from a member of the equity sales team. The bank has a platform called TD Invent, where employees can pitch ideas and the innovation leadership team can evaluate projects responsibly.

“Someone in our equity research sales desk came in and pretty much said, I’ve got this idea and brought it to TD Invent,” Bosman said.
“What I’ve loved most about this is when you build something super magical, you don’t need to go out and sell or put a face on it. Folks come in and say to us, ‘we want this, we need this or we’ve got ideas,’ and it’s truly the best when we’re able to bring our investment in data, cloud and infrastructure together.”

TD Securities built the TD AI Virtual Assistant on OpenAI’s GPT models. Bosman said TD worked with its own technology teams, with Layer 6 (the Canadian AI company the bank acquired in 2018) and with other strategic partners. The assistant integrates with the bank’s cloud infrastructure, which allows it to access internal research documents and market data, such as 13F filings and historical equity data.

Bosman calls the assistant a knowledge management system. The label reflects its ability to retrieve information through retrieval-augmented generation (RAG), then aggregate and synthesize that information into “concise context-aware summaries and insights” that help sales teams answer client questions.

The TD AI Virtual Assistant also gives users access to TD Bank’s foundation model, TD AI Prism. The model launched in June and is used across the entire bank, not just at TD Securities. At launch, the bank said TD AI Prism would improve the predictive performance of TD Bank’s applications by processing 100 times more data. The bank also said the model would replace its single-architecture models and keep customer data internal.

“The development posed unique challenges, as gen AI was relatively new to the organization when the initiative began, requiring careful navigation of governance and controls,” Bosman said. “Despite this, the project successfully brought together diverse teams across the enterprise, fostering collaboration to deliver a cutting-edge solution.”

He added that one of the assistant’s standout features is its text-to-SQL capability, which converts natural-language prompts into SQL queries.
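The article does not describe how TD Securities’ text-to-SQL pipeline or its patent-pending few-shot retrieval actually works. The Python sketch below illustrates the general technique under stated assumptions. It takes a new question, picks the stored (question, SQL) examples most similar to it, and places them in the prompt ahead of the question. A model can then learn from those examples in context, with no fine-tuning. Everything here is hypothetical: the `Example` store, the `holdings_13f` and `prices` tables, and the bag-of-words similarity, which stands in for a real embedding model. The code only builds the prompt and does not call any model.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    """A worked (question, SQL) pair from a hypothetical example store."""
    question: str
    sql: str


def _bag_of_words(text: str) -> Counter:
    # Lowercased word counts; a simple stand-in for an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse word-count vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_examples(question: str, pool: list[Example], k: int = 2) -> list[Example]:
    """Return the k stored examples whose questions are most similar to the new one."""
    q = _bag_of_words(question)
    ranked = sorted(pool, key=lambda ex: _cosine(q, _bag_of_words(ex.question)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, schema: str, pool: list[Example], k: int = 2) -> str:
    """Assemble a text-to-SQL prompt whose few-shot examples are chosen per question."""
    parts = [f"Schema:\n{schema}", ""]
    for ex in select_examples(question, pool, k):
        parts += [f"Question: {ex.question}", f"SQL: {ex.sql}", ""]
    parts += [f"Question: {question}", "SQL:"]
    return "\n".join(parts)


# Hypothetical schema and example pool, for illustration only.
SCHEMA = (
    "holdings_13f(filer TEXT, ticker TEXT, shares INTEGER, quarter TEXT)\n"
    "prices(ticker TEXT, date TEXT, close REAL)"
)
POOL = [
    Example("Which filers held the most shares of XYZ last quarter?",
            "SELECT filer, shares FROM holdings_13f WHERE ticker = 'XYZ' "
            "AND quarter = '2025Q1' ORDER BY shares DESC;"),
    Example("What was the closing price of XYZ on 2025-06-30?",
            "SELECT close FROM prices WHERE ticker = 'XYZ' AND date = '2025-06-30';"),
    Example("List all tickers held by Example Capital.",
            "SELECT DISTINCT ticker FROM holdings_13f WHERE filer = 'Example Capital';"),
]

if __name__ == "__main__":
    print(build_prompt("Who were the largest holders of ABC shares last quarter?", SCHEMA, POOL, k=1))
```

In this run, the question about the largest holders shares the most words with the 13F holdings example, so that example is the one placed in the prompt. A production system would more likely rank examples with embeddings and a vector index. The prompt-assembly step would look much the same.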
To adapt the assistant to its tasks, Bosman said, TD Securities developed optimizations that made the process easier.

“With patent-pending optimizations in prompt engineering and dynamic few-shot examples retrieval, we successfully achieved the business’s desired performance through context learning,” Bosman said. “As a result, fine-tuning the underlying OpenAI model was not required for interacting with both unstructured as well as tabular datasets.”

Banks slowly entering the agentic era

TD Bank and TD Securities, of course, are not the only banks interested in expanding from assistants to AI agents. BNY told VentureBeat that it began offering multi-agent solutions to its sales teams to help answer customer questions, such as those related to foreign currency support. Wells Fargo has also seen an increase in usage of its internal AI assistant. For its auto sales customers, Capital One built an agent that helps them sell more cars.

Many of these use cases emerged after months of pilot testing, as in other industries. Financial institutions, however, carry the additional burden of strict customer data privacy rules and fiduciary responsibilities.

TD Securities’ Bosman noted that many employees, even on the bank’s business side, are increasingly familiar with tools like ChatGPT. The challenge in pilot testing assistants and agents lies less in teaching employees about the tools than in several other tasks: establishing best practices for using the assistants, integrating them into existing workflows, understanding their limitations and defining how humans can provide feedback to mitigate hallucinations.

Bosman said he expects the assistant to eventually evolve into something that even users outside the bank would want to use when interacting with TD Securities. “My vision is that we see AI as something that can add value to us, but also to external customers at the bank.
Right now, it’s a massive opportunity for us around driving a stronger client experience and delivering a better colleague experience,” Bosman said.