Ex-OpenAI CTO Mira Murati unveils Thinking Machines: A startup focused on multimodality, human-AI collaboration

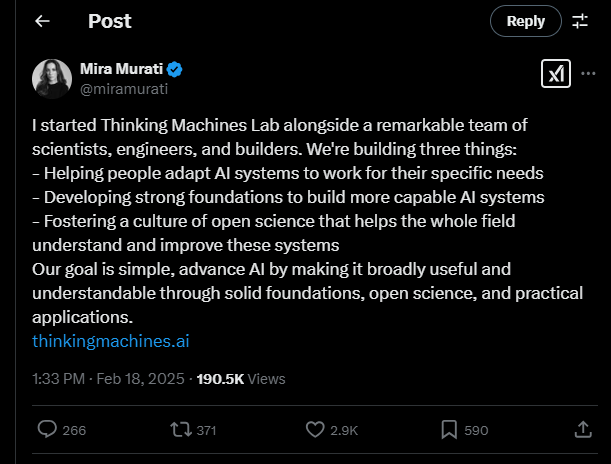

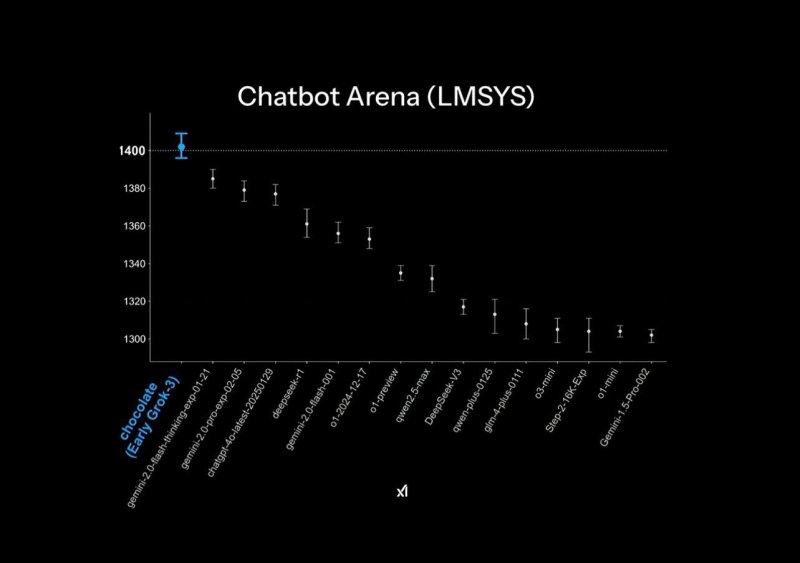

Ever since Mira Murati departed OpenAI last fall, many have wondered about the former CTO’s next move. Well, now we know (or at least have a rough idea).

Murati took to X today to announce her new venture, Thinking Machines Lab, an AI research and product company with a goal to “advance AI by making it broadly useful and understandable through solid foundations, open science and practical applications.”

Murati posted: “We’re building three things: Helping people adapt AI systems to work for their specific needs; Developing strong foundations to build more capable AI systems; Fostering a culture of open science that helps the whole field understand and improve these systems.”

Thinking Machines’ team of roughly two dozen engineers and scientists is stacked with other OpenAI alums, including cofounder and deep reinforcement learning pioneer John Schulman and ChatGPT co-creator Barret Zoph, which could position the startup to make significant strides in AI research and development.

As of this article’s posting, the company’s newly launched X account, @thinkymachines, had already amassed roughly 14,000 followers.

Model intelligence, multimodality, strong infrastructure

Thinking Machines (not to be confused with the now-defunct supercomputer and AI firm of the 1980s) isn’t yet offering specific examples of intended projects, but suggests a broad focus on multimodal capabilities, human-AI collaboration (as opposed to purely agentic systems) and strong infrastructure.

The goal is to build more “flexible, adaptable and personalized AI systems,” the company writes on its new website.

Multimodality is “critical” to the future of AI, Thinking Machines says, as it allows for more natural and efficient communication that captures intent and supports deeper integration.
“Instead of focusing solely on making fully autonomous AI systems, we are excited to build multimodal systems that work with people collaboratively,” the company writes.

Going forward, the startup will build models “at the frontier of capabilities” in areas including science and programming. Model intelligence will be key, as will infrastructure quality.

“We aim to build things correctly for the long haul, to maximize both productivity and security, rather than taking shortcuts,” Thinking Machines writes.

Calling scientific progress a “collective effort,” the company says it will collaborate with the AI community and publish technical blog posts, papers and code.

It will also take an “empirical and iterative” approach to AI safety, and pledges to maintain a “high safety bar” to prevent misuse, perform red-teaming and post-deployment monitoring, and share best practices, datasets, code and model specs.

Expanding on a decorated team

Thinking Machines boasts an impressive team of scientists, engineers and builders behind top AI models including ChatGPT, Character AI and Mistral, as well as popular open-source projects PyTorch, the OpenAI Gym Python library, the Fairseq sequence modeling toolkit and Meta AI’s Segment Anything.

The startup is looking to expand on that base; it is currently on the lookout for a research program manager, as well as product builders and machine learning (ML) experts.

The goal is to put together a “small, high-caliber team” of both PhD holders and the self-taught, the company writes. Those interested should apply here.

Another OpenAI competitor?

Murati abruptly resigned from OpenAI in September 2024, following the unexpected departures of other execs including Schulman and cofounder and former chief scientist Ilya Sutskever. She had joined the company in 2018 and ascended to CTO in 2022 (the year that brought us the groundbreaking ChatGPT).
At the time, she teased on X: “I’m stepping away because I want to create the time and space to do my own exploration.”

Her next move had been a topic of speculation given her reputation as a steady operational force during OpenAI’s tumultuous period in late 2023, when the board’s attempted ouster of CEO Sam Altman briefly upended the company. Internally, she was seen as a pragmatic leader who kept OpenAI stable through uncertainty.

Her decision to strike out on her own follows a broader shift in the AI research landscape, where the breakneck race to train ever-larger models is giving way to an era of applied AI, agentic systems and real-world execution.

Her Thinking Machines announcement comes amid a flurry of new model capabilities and benchmark-shattering results. OpenAI continues to make innovative breakthroughs, including its new o3-powered Deep Research mode, which searches the web and curates extensive reports, but it also faces strong competition in all directions. Just today, for instance, xAI released Grok 3, which rivals GPT-4o’s performance.

With OpenAI cofounder Ilya Sutskever launching Safe Superintelligence and other industry veterans charting their own paths, the question now is where Thinking Machines will place its bets in this evolving landscape.