Lightning AI’s AI Hub shows AI app marketplaces are the next enterprise game-changer

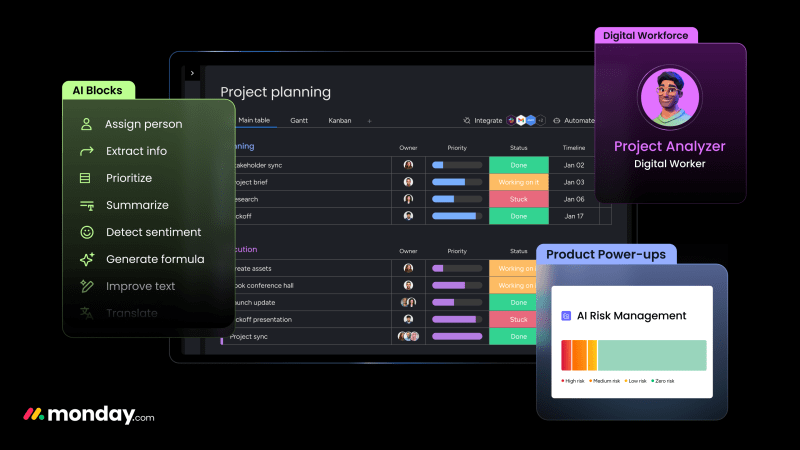

The last mile problem in generative AI refers to the challenge enterprises face in getting applications into production. For many companies, the answer lies in marketplaces, which enterprises and developers can browse for applications much as consumers browse Apple's App Store and download new programs onto their phones. Providers such as Amazon Bedrock and Hugging Face have begun building marketplaces that offer ready-built applications from partners, which customers can integrate into their stack.

The latest entrant into the AI marketplace space is Lightning AI, the company behind the open-source Python library PyTorch Lightning. Today it is launching AI Hub, a marketplace for both AI models and applications. What sets it apart from other marketplaces, however, is that Lightning allows developers to actually deploy applications while keeping enterprise-grade security.

Lightning AI CEO William Falcon told VentureBeat in an exclusive interview that AI Hub lets enterprises find the application they want without having to assemble all the other platforms required to run it.

Falcon noted that previously, enterprises had to find hardware providers that could run and host models. The next step was to find a way to deploy that model and make it into something useful.

"But then you need those models to do something, and that's where the last mile issue is, that's the end thing enterprises use, and most of that is from standalone companies that offer an app," he said. "They bought all these tools, did a bunch of experiments, and then couldn't deploy them or really take them to that last mile."

Falcon added that AI Hub "removes the need for specialized platforms." Enterprises can find any type of AI application they want in one place, which helps organizations stuck in the prototype phase move to deployment faster.
AI Hub as an app store

AI Hub hosts more than 50 APIs at launch, a mix of foundation models and applications, including popular models such as DeepSeek-R1.

Enterprises can access AI Hub and find applications built with Lightning's flagship product, Lightning AI Studio, or by other developers. They can then run these on Lightning's cloud or in private enterprise cloud environments. Organizations can link their AWS or Google Cloud instances and keep data within their company's virtual private cloud. Falcon said this gives enterprises control over deployment.

Lightning AI's AI Hub can work with most cloud providers. While it hosts open-source models, Falcon said the apps it hosts are not open source, meaning users cannot alter their code.

Lightning AI will offer AI Hub free to current customers, with 15 monthly credits to run applications. It will offer different pricing tiers for enterprises that want to connect to their private clouds.

Falcon said AI Hub speeds up the deployment of AI applications within an organization because everything teams need is on the platform.

"Ultimately, as a platform, what we offer enterprises is iteration and speed," he said. "I'll give you an example: We have a big Fortune 100 pharma company customer. Within a few days of when DeepSeek came out, they had it in production, already running."

More AI marketplaces

Lightning AI's AI Hub is not the first AI app marketplace, but its launch shows how quickly the enterprise AI space has moved since the release of ChatGPT, which set off a generative AI boom in enterprise technology.

API marketplaces still offer plenty of SaaS applications to enterprises, and more companies are beginning to offer AI-powered applications through App Store-style storefronts that make them easier to deploy. AWS, for instance, announced the Amazon Bedrock Marketplace for specialized foundation models and Buy with AWS, which features services from AWS partners, during re:Invent in December.
Hugging Face, for its part, has made Spaces, an AI app directory that lets developers search for and try out new apps, generally available. Hugging Face CEO Clement Delangue posted on X that Spaces "has quietly become the biggest AI app store, with 400,000 total apps, 2,000 new apps created every day, getting visited 2.5M times every week!" He added that the launch of Spaces shows how "The future of AI will be distributed."

Even OpenAI's GPT Store on ChatGPT technically functions as a marketplace for people to try out custom GPTs.

https://twitter.com/_akhaliq/status/1886831521216016825

Falcon noted that most technologies end up being offered in a marketplace, especially when they need to reach many potential customers. In fact, this is not the first time Lightning AI has launched an AI marketplace. Lightning AI Studio, first announced in December 2023, lets enterprises create AI platforms using pre-built templates.

"Every technology ends up here," said Falcon. "Through the evolution of any technology, you're going to end up in something like this. The iPhone's a good example. You went from point solutions to calculators, flashlights and notepads. Something like Slack did the same thing where you had an app to send files or photos before, but now it's all in one. There hasn't really been that for AI because it's still kind of new."

Lightning AI, though, faces tough competition, especially from Hugging Face, which has long been a repository of models and applications and is widely used by developers. Falcon said what makes AI Hub different is that it not only gives users access to state-of-the-art applications with powerful models but also lets them begin their AI deployment on the platform, with a focus on enterprise security.

"I can hit deployment here. As an enterprise they can point to their AWS or Google Cloud and the application runs in their private cloud. No data leaks or security issues; it's all within your firewall," he said.
