Google launches Gemini 2.0 Pro, Flash-Lite and connects reasoning model Flash Thinking to YouTube, Maps and Search

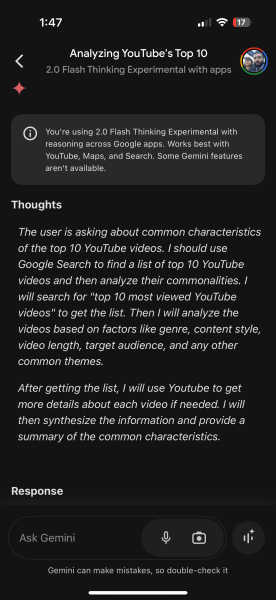

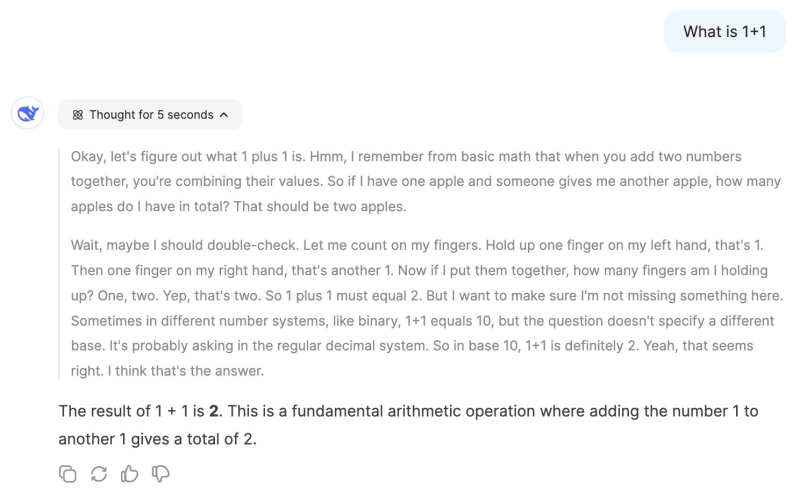

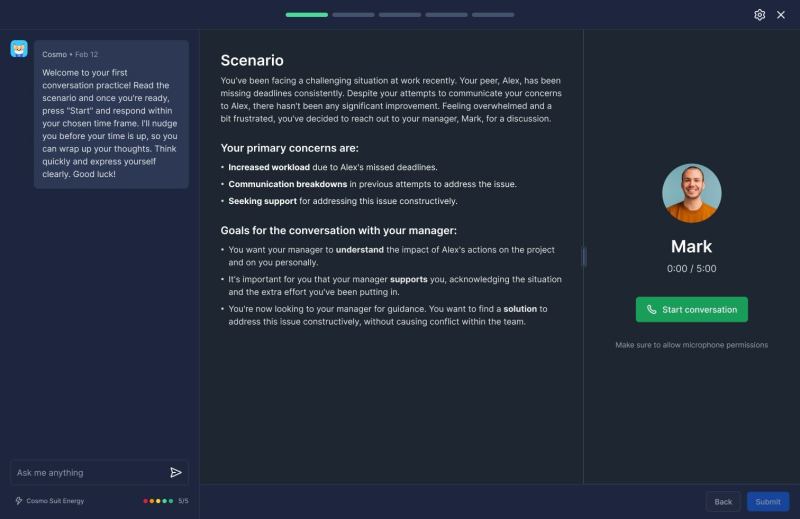

Google’s Gemini series of AI large language models (LLMs) started off rough nearly a year ago with some embarrassing incidents of image generation gone awry, but it has steadily improved since then, and the company appears intent on making its second-generation effort, Gemini 2.0, the biggest and best yet for consumers and enterprises.

Today, the company announced the general release of Gemini 2.0 Flash, introduced Gemini 2.0 Flash-Lite, and rolled out an experimental version of Gemini 2.0 Pro. These models, designed to support developers and businesses, are now accessible through Google AI Studio and Vertex AI, with Flash-Lite in public preview and Pro available for early testing.

“All of these models will feature multimodal input with text output on release, with more modalities ready for general availability in the coming months,” Koray Kavukcuoglu, CTO of Google DeepMind, wrote in the company’s announcement blog post. The statement highlights an advantage Google is bringing to the table even as competitors such as DeepSeek and OpenAI continue to launch powerful rivals.

Google plays to its multimodal strengths

Neither DeepSeek-R1 nor OpenAI’s new o3-mini model can accept multimodal inputs, that is, images and file uploads or attachments. While R1 can accept them in its website and mobile app chat, the model performs optical character recognition (OCR), a more than 60-year-old technology, to extract only the text from these uploads; it does not actually understand or analyze any of the other features they contain.

However, both are a new class of “reasoning” models that deliberately take more time to think through answers and reflect on “chains-of-thought” and the correctness of their responses.
That sets them apart from typical LLMs like the Gemini 2.0 Pro series, so comparing Gemini 2.0 with DeepSeek-R1 and OpenAI o3-mini is a bit apples-to-oranges.

But there was some news on the reasoning front today from Google, too: Google CEO Sundar Pichai took to the social network X to announce that the Google Gemini mobile app for iOS and Android has been updated with Google’s own rival reasoning model, Gemini 2.0 Flash Thinking. The model can be connected to Google Maps, YouTube and Google Search, allowing for a whole new range of AI-powered research and interactions that upstarts without such services, like DeepSeek and OpenAI, simply can’t match.

I tried it briefly in the Google Gemini iOS app on my iPhone while writing this piece, and it was impressive on my initial queries: it thought through the commonalities of the top 10 most popular YouTube videos of the last month and also gave me a table of nearby doctors’ offices with their opening and closing hours, all within seconds.

Gemini 2.0 Flash enters general release

The Gemini 2.0 Flash model, originally launched as an experimental version in December, is now production-ready. Designed for high-efficiency AI applications, it provides low-latency responses and supports large-scale multimodal reasoning.

One major advantage over the competition is its context window: the number of tokens that the user can add in the form of a prompt and receive back in one back-and-forth interaction with an LLM-powered chatbot or application programming interface (API). While many leading models, such as OpenAI’s o3-mini, which debuted last week, support only 200,000 or fewer tokens (roughly the equivalent of a 400- to 500-page novel), Gemini 2.0 Flash supports 1 million. That means it can handle vast amounts of information, making it particularly useful for high-frequency and large-scale tasks.
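To make the token-to-page comparison concrete, here is a rough back-of-the-envelope sketch. It assumes two common rules of thumb that are not from Google or OpenAI: English text averages about 0.75 words per token, and a printed novel page holds roughly 300 to 375 words. Under those assumptions, 200,000 tokens lands at about 400 to 500 pages, in line with the figure above. The model labels in the dictionary are plain illustrative names, not official API identifiers, and because the context window covers both the prompt and the reply, the hypothetical `fits_in_context` helper counts the reserved output budget against it too.

```python
# Rules of thumb (assumptions, not official figures): ~0.75 English words
# per token, and ~300-375 words per printed novel page.
WORDS_PER_TOKEN = 0.75
WORDS_PER_PAGE_MIN = 300  # sparser pages -> more pages
WORDS_PER_PAGE_MAX = 375  # denser pages -> fewer pages


def pages_for_tokens(tokens: int) -> tuple[int, int]:
    """Return an approximate (min_pages, max_pages) range for a token budget."""
    words = tokens * WORDS_PER_TOKEN
    return round(words / WORDS_PER_PAGE_MAX), round(words / WORDS_PER_PAGE_MIN)


def fits_in_context(prompt_tokens: int, max_output_tokens: int, context_window: int) -> bool:
    """Hypothetical helper: True if the prompt plus the reserved reply fits the window."""
    return prompt_tokens + max_output_tokens <= context_window


# Context-window sizes as reported in the article (labels are illustrative).
CONTEXT_WINDOWS = {
    "o3-mini": 200_000,
    "gemini-2.0-flash": 1_000_000,
}

for model, window in CONTEXT_WINDOWS.items():
    low, high = pages_for_tokens(window)
    print(f"{model}: ~{low}-{high} pages")
```

Run as-is, this prints roughly 400 to 500 pages for a 200,000-token window and 2,000 to 2,500 pages for a 1-million-token window. A 900,000-token prompt with an 8,192-token reply budget would fit the larger window but not the smaller one. Real token counts vary by tokenizer and language, so these are estimates only.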
Gemini 2.0 Flash-Lite arrives to bend the cost curve to the lowest yet

Gemini 2.0 Flash-Lite, meanwhile, is an all-new LLM aimed at providing a cost-effective AI solution without compromising on quality. Google DeepMind states that Flash-Lite outperforms its full-size (larger parameter-count) predecessor, Gemini 1.5 Flash, on third-party benchmarks such as MMLU Pro (77.6% vs. 67.3%) and Bird SQL programming (57.4% vs. 45.6%), while maintaining the same pricing and speed. It also supports multimodal input and features a context window of 1 million tokens, matching the full Flash model. Currently, Flash-Lite is available in public preview through Google AI Studio and Vertex AI, with general availability expected in the coming weeks.

As shown in the table below, Gemini 2.0 Flash-Lite is priced at $0.075 per million input tokens and $0.30 per million output tokens. Flash-Lite is positioned as a highly affordable option for developers, outperforming Gemini 1.5 Flash across most benchmarks while keeping the same cost structure.

Logan Kilpatrick highlighted the affordability and value of the models, stating on X: “Gemini 2.0 Flash is the best value prop of any LLM, it’s time to build!”

Indeed, compared with other leading traditional LLMs available via provider API, such as OpenAI’s GPT-4o mini ($0.15/$0.60 per 1 million tokens in/out), Anthropic Claude ($0.80/$4 per 1M in/out) and even DeepSeek’s traditional LLM V3 ($0.14/$0.28), Gemini 2.0 Flash appears to be the best bang for the buck.

Gemini 2.0 Pro arrives in experimental availability with a 2-million-token context window

For users requiring more advanced AI capabilities, the Gemini 2.0 Pro (experimental) model is now available for testing. Google DeepMind describes it as its strongest model for coding performance and for handling complex prompts.
It features a 2-million-token context window and improved reasoning capabilities, with the ability to integrate external tools like Google Search and code execution. Sam Witteveen, co-founder and CEO of Red Dragon AI and an external Google developer expert for machine learning who often partners with VentureBeat, discussed the Pro model in a YouTube review: “The new Gemini 2.0 Pro model has a two-million-token context window, supports tools, code execution, function calling and grounding with Google Search, everything we had in Pro 1.5, but improved.”

He also noted Google’s iterative approach to AI development: “One of the key differences in Google’s strategy is that they release experimental versions of models before they go GA (generally available), allowing for rapid iteration based