DataRobot launches Enterprise AI Suite to bridge gap between AI development and business value

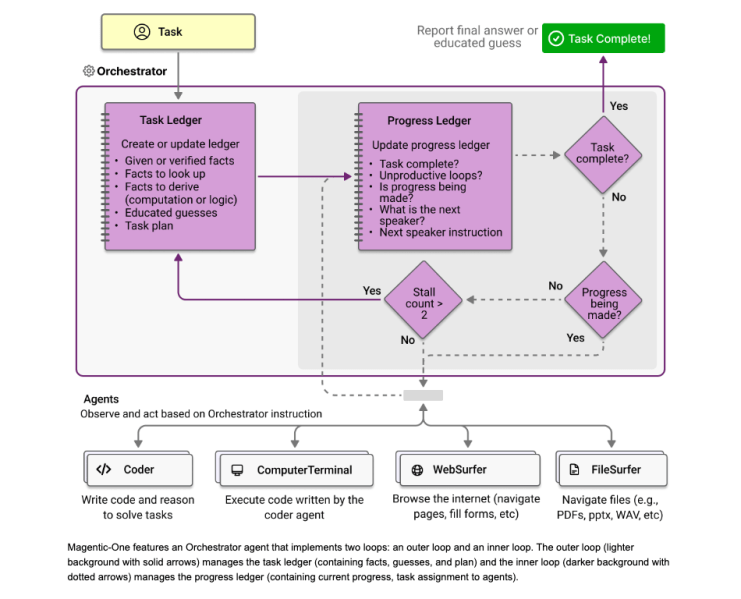

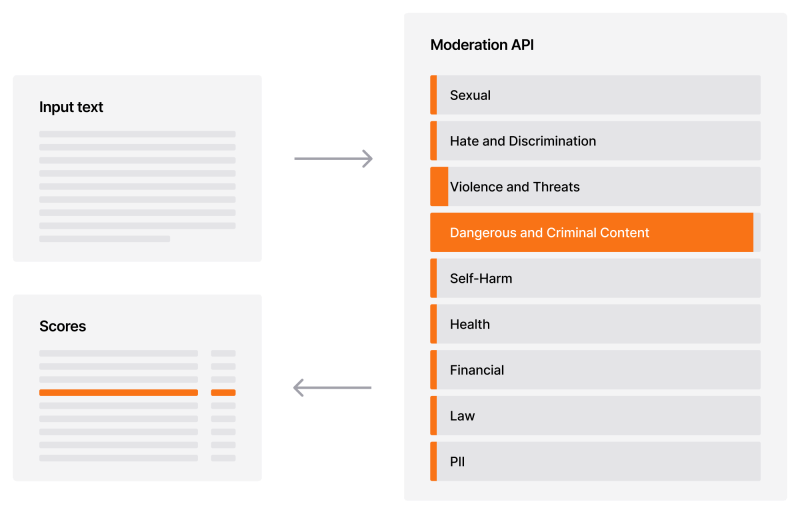

As enterprises worldwide pour resources into AI efforts, many struggle to convert their technological investments into measurable business outcomes. That’s the challenge DataRobot is looking to solve with a series of new product updates announced today.

DataRobot is not new to the AI space. In fact, the company has been in business for 12 years, well before the current generative AI boom. A core focus since its inception has been enabling predictive analytics to help improve business outcomes. Like many other vendors in recent years, DataRobot has turned its attention to gen AI support. With the new Enterprise AI Suite, announced today, the company is looking to go further and differentiate itself in an increasingly crowded market.

The new integrated platform promises to let enterprises start solving business problems with AI out of the box, rather than having to piece together multiple services. It is designed to run across multiple cloud environments as well as on-premises, giving customers more flexibility.

The Enterprise AI Suite is a comprehensive platform that helps enterprises build, deploy and manage both predictive and generative AI applications while ensuring proper governance and safety controls. DataRobot’s focus is on creating tangible business value from AI, rather than just providing the technology.

“How do you take AI to the next level in terms of value creation? I tell people that customers don’t eat models for breakfast,” Debanjan Saha, CEO of DataRobot, told VentureBeat. “You need to build applications and agents, and not only that, you have to integrate them into their business fabric in order to create value.
That’s what this release is all about.”

Addressing the challenges of enterprise AI implementation

According to recent DataRobot research, 90% of AI projects fail to move from prototype to production.

“Just training models does not create any enterprise value,” Saha said.

The new DataRobot Enterprise AI Suite introduces application templates that provide immediate functionality while keeping the flexibility to customize. This approach addresses a common market gap between inflexible off-the-shelf AI applications and resource-intensive custom development.

Saha explained that the templates are designed to be horizontal, meaning they can be applied across different industries rather than being specific to one vertical. While the templates provide a starting point, enterprises can customize them to their specific needs, including changing the data sources, adjusting model parameters, modifying the user interface and integrating the applications with other systems in their technology stack.

Unifying predictive and generative AI

A key differentiator for DataRobot’s platform is its unified approach to both traditional predictive AI and gen AI capabilities. The platform allows organizations to extend foundation models with enterprise data while implementing the necessary safety controls.

DataRobot’s Enterprise AI Suite supports a full retrieval-augmented generation (RAG) pipeline to help extend foundation models such as Llama 3 and Gemini with enterprise data.

One of the new templates combines both technologies. As a potential use case, Saha said an enterprise could use a predictive model to forecast which customers are going to churn, when they are going to churn and why. Data from that predictive model can then be fed to a gen AI model to create a hyper-personalized next-best-offer email campaign.

The DataRobot platform includes built-in safeguards for both predictive and generative models.
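As an illustration of what a guard around a model can look like, here is a minimal Python sketch. It is not DataRobot’s implementation or API: the `Guard` interface, `grounding_guard` and `guarded_generate` are hypothetical names, the "model" is a stand-in lambda rather than a real LLM call, and word overlap with the retrieved context is a deliberately crude proxy for an accuracy check.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Set

# Hypothetical guard interface: takes (prompt, response) and returns a
# reason string when the response should be blocked, or None when it passes.
Guard = Callable[[str, str], Optional[str]]


@dataclass
class GuardResult:
    response: str
    blocked: bool
    reasons: List[str] = field(default_factory=list)


def _tokens(text: str) -> Set[str]:
    # Lowercased alphanumeric words; punctuation is ignored.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def grounding_guard(context: str, min_overlap: float = 0.5) -> Guard:
    """Block answers whose words mostly do not appear in the retrieved context.

    A crude stand-in for an accuracy check, not a real faithfulness metric.
    """
    context_words = _tokens(context)

    def check(prompt: str, response: str) -> Optional[str]:
        words = _tokens(response)
        if not words:
            return "empty response"
        overlap = len(words & context_words) / len(words)
        if overlap < min_overlap:
            return f"low grounding ({overlap:.2f} < {min_overlap})"
        return None

    return check


def guarded_generate(generate: Callable[[str], str], prompt: str,
                     guards: List[Guard],
                     fallback: str = "I can't answer that reliably.") -> GuardResult:
    """Call the model, run every guard on its output, fall back if any guard fires."""
    response = generate(prompt)
    reasons = []
    for guard in guards:
        reason = guard(prompt, response)
        if reason is not None:
            reasons.append(reason)
    if reasons:
        return GuardResult(fallback, True, reasons)
    return GuardResult(response, False)


# Usage with stand-in "models" (lambdas) instead of real LLM calls.
context = "Q3 revenue was 12 million dollars, up 8 percent year over year."
guards = [grounding_guard(context)]
ok = guarded_generate(lambda p: "Q3 revenue was 12 million dollars.",
                      "What was Q3 revenue?", guards)
bad = guarded_generate(lambda p: "Our CEO resigned after a merger with Acme.",
                       "What was Q3 revenue?", guards)
print(ok.blocked, bad.blocked, bad.reasons)
# prints: False True ['low grounding (0.00 < 0.5)']
```

Keeping each guard a plain callable means further checks, for example one for privacy leaks, can be added to the list without touching the generation code.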
“These models have all sorts of issues with respect to accuracy, with respect to leaking privacy, or private or secure data,” Saha noted. “So there are a whole bunch of guard models that you want to put around them.”

Advanced agentic AI brings new reasoning to enterprise use cases

Another standout feature of the new DataRobot platform is the integration of AI agent capabilities. The agentic AI approach is designed to help organizations handle complex business queries and workflows. The system employs specialist agents that work together to solve multifaceted business problems, an approach that is particularly valuable for organizations dealing with complex data environments and multiple business systems.

“You ask a question to your agentic workflow, it breaks up the questions into a set of more specific questions, and then it routes them to agents which are specialists in various different areas,” Saha explained.

For instance, a business analyst’s question about revenue might be routed to multiple specialized agents, one handling SQL queries and another using Python, before the results are combined into a comprehensive response.

Observability and governance are the keys to enterprise AI success

As part of the updates, DataRobot is also rolling out a new observability stack. The new observability capabilities provide detailed insight into AI system performance, especially for RAG implementations.

For example, Saha explained, an organization might have a corpus of enterprise data. It uses some kind of chunking and embedding model, maps the results to a vector database and then puts an LLM in front of it. What happens if the responses aren’t what the organization expects? That’s where observability fits in. The platform offers advanced visualization and analytical tools to diagnose such issues.
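One signal such tools can surface is whether incoming questions land near any stored content at all. The Python sketch below is a hypothetical illustration, not DataRobot’s instrumentation: it assumes precomputed embeddings (toy 2-D vectors here, not output from a real embedding model), uses made-up function names, and flags queries whose top-k nearest chunks have low mean cosine similarity, meaning retrieval returns the *nearest* chunks even though none is actually close.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def coverage_report(queries: Dict[str, Sequence[float]],
                    chunks: Dict[str, Sequence[float]],
                    k: int = 2) -> Dict[str, Tuple[float, List[str]]]:
    """For each query, return (mean similarity of its top-k chunks, their ids).

    Assumes at least one chunk is stored.
    """
    report = {}
    for qid, qvec in queries.items():
        scored = sorted(((cosine(qvec, cvec), cid) for cid, cvec in chunks.items()),
                        reverse=True)[:k]
        mean_sim = sum(score for score, _ in scored) / len(scored)
        report[qid] = (mean_sim, [cid for _, cid in scored])
    return report


def poorly_covered(report: Dict[str, Tuple[float, List[str]]],
                   min_sim: float = 0.8) -> List[str]:
    """Queries whose nearest chunks are still far away: likely spurious retrieval."""
    return sorted(qid for qid, (sim, _) in report.items() if sim < min_sim)


# Toy 2-D "embeddings": every stored chunk clusters in one direction.
chunks = {"pricing_faq": (1.0, 0.0), "pricing_tiers": (0.95, 0.05), "discounts": (0.9, 0.1)}
queries = {"pricing": (1.0, 0.05), "hr_policy": (0.0, 1.0)}

report = coverage_report(queries, chunks)
print(poorly_covered(report))  # prints: ['hr_policy']
```

Note that `hr_policy` still gets two "nearest" chunks back (`discounts` and `pricing_tiers`), which is the spurious-answer risk; only the low similarity score exposes that those chunks are irrelevant.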
“We have put together a lot of instrumentation which lets people visually understand, for example, if you have a lot of clustering of data in the vector database, you can get a spurious answer,” Saha said. “You would be able to see that, if you see your questions are landing in areas where you don’t have enough information.”

This observability extends to the platform’s governance capabilities, which include real-time monitoring and intervention features. The system can automatically detect and handle sensitive information, with customizable rules for different scenarios.

“We are really excited about what we call AI that makes business sense,” Saha said. “DataRobot has always been very good at focusing on creating business value from AI. It’s not technology for the sake of technology.”
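Rule-based handling of sensitive information with customizable rules, as described above, can be sketched as a list of patterns paired with actions. This is a hypothetical Python illustration, not DataRobot’s rule format: the `Rule` type, the regular expressions and the "redact"/"block" actions are assumptions, and regexes alone miss many forms of sensitive data.

```python
import re
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Rule:
    name: str
    pattern: str  # regular expression to search for
    action: str   # "redact" replaces matches; "block" flags the whole text


# Hypothetical defaults; real deployments need far broader detection.
DEFAULT_RULES = [
    Rule("email", r"[\w.+-]+@[\w-]+\.[\w.]+", "redact"),
    Rule("us_ssn", r"\b\d{3}-\d{2}-\d{4}\b", "block"),
]


def apply_rules(text: str, rules: List[Rule]) -> Tuple[str, List[str], bool]:
    """Return (text with redactions applied, names of rules that fired, blocked?)."""
    fired: List[str] = []
    blocked = False
    for rule in rules:
        regex = re.compile(rule.pattern)
        if not regex.search(text):
            continue
        fired.append(rule.name)
        if rule.action == "redact":
            text = regex.sub(f"[{rule.name.upper()}]", text)
        elif rule.action == "block":
            blocked = True  # the caller decides what to do with blocked text
        else:
            raise ValueError(f"unknown action: {rule.action}")
    return text, fired, blocked


# A scenario-specific policy is just a different list of rules.
hr_rules = DEFAULT_RULES + [Rule("employee_id", r"\bEMP\d{5}\b", "redact")]

redacted = apply_rules("Contact jane.doe@example.com for the refund.", DEFAULT_RULES)
flagged = apply_rules("SSN 123-45-6789 on file", DEFAULT_RULES)
hr = apply_rules("Ticket opened by EMP12345", hr_rules)
print(redacted)  # prints: ('Contact [EMAIL] for the refund.', ['email'], False)
print(flagged)   # prints: ('SSN 123-45-6789 on file', ['us_ssn'], True)
print(hr)        # prints: ('Ticket opened by [EMPLOYEE_ID]', ['employee_id'], False)
```

Separating the rule list from the matching loop is what makes the rules customizable per scenario: a stricter HR policy adds a rule rather than changing code.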