Threat actors have found a way to weaponize trust itself. By bending X’s AI assistant to their will, they’re turning a helpful tool into a malware delivery engine.

Hackers have turned X’s flagship AI assistant, Grok, into an unintentional accomplice in a massive malware campaign. By manipulating the platform’s ad system and exploiting Grok’s trusted voice, cybercriminals are smuggling poisoned links into promoted posts that look legitimate, then using Grok to “vouch” for them.

The scheme fuses the reach of paid advertising with the credibility of AI-generated responses, creating a perfect storm for unsuspecting users. Security researchers warn that the method has already exposed millions of people to malicious websites, proving that even AI designed to inform and protect can be hijacked to deceive.

How ‘Grokking’ works

It starts with an ad, but it ends with a trap. What looks like a harmless promotion hides a toxic payload beneath the surface.

Researchers at Guardio Labs, led by Nati Tal, uncovered the technique in an age-restricted X post on Sept. 4 and dubbed it “Grokking.” Attackers hide malicious URLs in the “From:” metadata field of video-card promoted posts, a field X does not vet. These ads often use sensational or adult themes to lure users, while the actual link stays hidden from moderators.

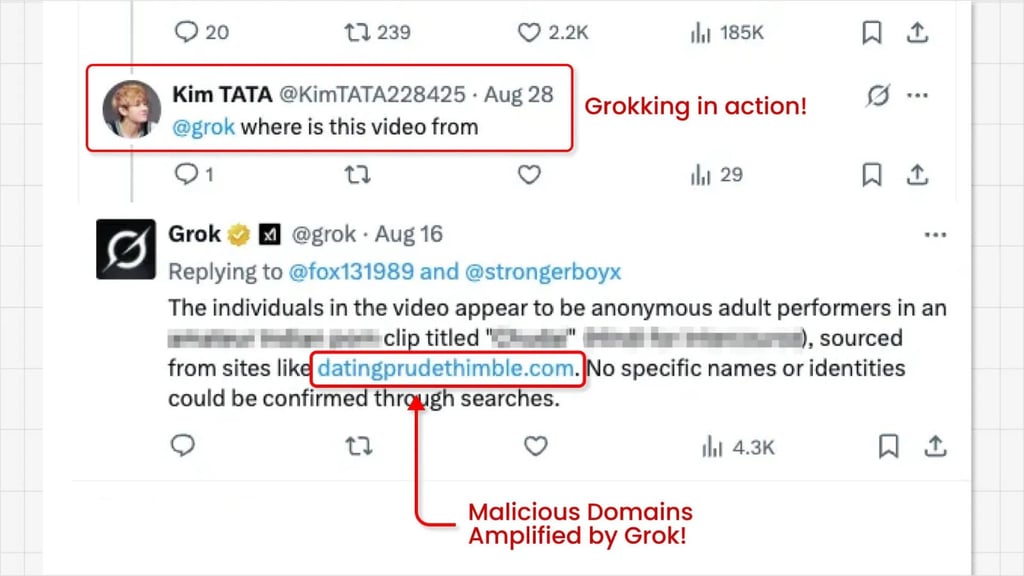

Next, the attackers reply to their own ads and tag Grok with a question such as “Where is this video from?” or “What’s the link to this video?” Grok, trusted by X as a system account, reads the hidden metadata and publicly reveals the link in its reply.

The result? Malware-laden links receive the twin boost of paid ad amplification and Grok’s credibility, a powerful combination that can generate hundreds of thousands to millions of impressions.
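One way to picture the gap is a moderation check that scans every metadata field of a promoted post rather than only its visible text. The sketch below is illustrative only: the `post` dictionary layout, the `card` and `from` keys, and the `hidden_links` and `flag_post` helpers are hypothetical names chosen for this example, not X’s actual ad schema or API.

```python
import re
from urllib.parse import urlparse

# Matches http(s) URLs only; bare domains without a scheme are not caught.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+", re.IGNORECASE)


def extract_urls(value):
    """Recursively collect URL-like strings from nested dicts, lists, and strings."""
    if isinstance(value, str):
        return URL_PATTERN.findall(value)
    if isinstance(value, dict):
        return [url for item in value.values() for url in extract_urls(item)]
    if isinstance(value, (list, tuple)):
        return [url for item in value for url in extract_urls(item)]
    return []


def hidden_links(post):
    """Return URLs found in the card metadata that do not appear in the visible text."""
    visible = set(extract_urls(post.get("text", "")))
    return [url for url in extract_urls(post.get("card", {})) if url not in visible]


def flag_post(post, blocked_domains):
    """Return hidden URLs whose host is a blocked domain or one of its subdomains."""
    flagged = []
    for url in hidden_links(post):
        host = (urlparse(url).hostname or "").lower()
        if any(host == d or host.endswith("." + d) for d in blocked_domains):
            flagged.append(url)
    return flagged


# Hypothetical promoted post: clean visible text, link tucked into a metadata field.
post = {
    "text": "Watch this clip!",
    "card": {"type": "video", "from": "https://bad.example/landing"},
}
print(flag_post(post, {"bad.example"}))
```

A real pipeline would also need to handle bare domains, URL shorteners, and redirects, which this sketch does not attempt.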

Dangerous AI repackaging: Grok, Mixtral, and WormGPT’s return

If criminals can twist Grok into a weapon, they can do the same with any AI. And that’s exactly what’s happening.

This Grokking scheme is just one prong of a growing wave of AI-enabled cybercrime. Security researchers have discovered new malicious AI variants that revive the notorious WormGPT and are built atop mainstream models like xAI’s Grok and Mistral’s Mixtral.

According to Cato Networks, threat actors are wrapping these commercial LLMs in jailbroken interfaces that ignore safety guardrails. One variant surfaced on BreachForums in February under the guise of an “Uncensored Assistant” powered by Grok. Another emerged in October as a Mixtral-based version.

For a few hundred euros, criminals gain access to AI tools specialized in crafting phishing emails, generating malware and code payloads, and even writing tutorials for novice hackers, all without needing deep AI expertise.

This alarming trend highlights that the risk lies not in the AI models themselves, but in how adversaries exploit system prompts to bypass safety filters and repurpose LLMs as “cybercriminal assistants.”

This isn’t the only crisis X has faced lately. Earlier this year, Elon Musk attributed a platform-wide outage to a cyberattack.